Explore our expert-made templates & start with the right one for you.

Using Snowflake in Data Lake Architecture

Trying to understand where Snowflake fits into your cloud infrastructure? Check out our 2022 benchmark report to learn how to best design your data pipeline in order to get the best latency and price/performance from your cloud data warehouse. Access the report for free here

Use Snowflake WITH a data lake? Use Snowflake AS a data lake? Use Snowflake INSTEAD OF a data lake? It’s easy to feel a bit adrift as to whether and how to plug Snowflake into your cloud data stack. Let’s look, simply and clearly, at where Snowflake best fits.

Is Snowflake a Data Lake?

Over the past five years, Snowflake has grown from a virtual unknown to a vendor with thousands of customers. Businesses adopted Snowflake either as part of a migration from on-premises enterprise data warehouses (such as Teradata) or as a more elastically-scalable and easier-to-manage alternative to an existing cloud data warehouse (such as Amazon Redshift or Google BigQuery).

Because of the sizable investment Snowflake often represents, some data teams consider it for every use case. This leads organizations inevitably to compare data lakes to Snowflake and other cloud data warehouses. (The data lake is the other data platform to emerge over the past decade, first as on-premises Hadoop installations and now in the cloud, built on top of raw object storage.)

Other Snowflake Resources to Explore

- Learn about the latest changes to Snowpipe Streaming

- Discover how you can perform upserts with Snowpipe in Snowflake

- Compare ingestion methods when writing Kafka data to Snowflake

Snowflake: Data lake or data warehouse?

We detail the differences between a warehouse and a data lake elsewhere, but to summarize:

- Data lakes offer a cheap object store of raw data and rely on external query tools to analyze large data sets using very affordable compute resources. Data lakes, since they are accessing a file system rather than a structured format, are not highly performant without optimization. But once optimized they can be extremely cost effective, especially at scale. They also are well-equipped to handle streaming data.

- Data warehouses store structured data in a proprietary format for analytics access through a tightly-coupled query layer. Compute speed is fast compared to that of an unoptimized data lake, but also an order of magnitude more expensive.

So, where does Snowflake fall?

As with other data warehouses, Snowflake requires a proprietary data format, works with structured and semi-structured (but not unstructured) data, and requires its own query engine. But it differs in two key aspects from the traditional data warehouse:

- It’s only offered in the cloud

- It separates storage from an elastic compute layer

These improvements initially distinguished Snowflake in the marketplace from Teradata and Redshift, though each has since attempted to match these attributes – Teradata with its cloud Vantage service and Amazon with Redshift RA, which separates storage and compute. As a result, each of these services now shares these two characteristics with cloud data lakes, which may contribute to the flurry of confusion regarding when to use which.

Bottom line: While Snowflake offers some decoupling of storage from compute, it cannot be considered a data lake due its reliance on proprietary data formats and structured storage. A data lake is built around broad access to data and the ability to choose between various compute, query, and data management tools; despite all of Snowflake’s advantages as a data warehouse, it does not offer these features.

Where to put your data is often a question of cost

So Snowflake isn’t really a data lake. But is there any reason not to just store all your data on it, and rely on its capabilities to crunch data quickly using SQL? One reason you might want to consider is cost.

Cost is of course an important factor to consider when deciding how to use various analytics platforms. Using Snowflake to run complex queries on high volume data, at high velocity, can add significantly to cost.

- Snowflake charges based on the amount of time a virtual data warehouse (VDW) runs, plus the size of the VDW (number of cores) and the feature set subscribed to (Standard, Enterprise, or Business-Critical). For a given VDW the rate is the same, regardless of the load on the VDW. This differs from data lake platforms that offer spot pricing; a smaller load can be addressed by a cheaper instance.

- Snowflake only processes data in its proprietary format, and transforming data for ingestion by Snowflake can be expensive in and of itself. Because ingesting streaming data creates a continuous load, it can keep the Snowflake VDW meter running 24/7. Since Snowflake doesn’t charge differently for a 5% or 80% load on a given virtual data warehouse size, these costs can become significant.

- Snowflake compute is an order of magnitude more expensive than running the same job on a data lake.

So there is a real risk of a runaway cost scenario occurring with Snowflake. When you realize you may have too much data stored in Snowflake it can be even more expensive to get the data out, because of the need to transform it into another format.

There could be a similar scenario involving your data scientists, who may repeatedly analyze the same or similar sets of data as they experiment with different models and test different hypotheses. That’s highly compute-intensive. In addition, they must be able to plug into the ML or AI tools of their choice, and not be locked into a single technology simply because a proprietary data format demands it.

For these reasons, it can be inefficient and expensive to rely on Snowflake for all data analytics needs. It may make better business sense to supplement Snowflake with a data lake.

Compare Snowflake to Databricks

The competition between Snowflake and Databricks has heated up recently. How does Snowflake’s approach compare to Databricks’s self-proclaimed Lakehouse tool, and how stark are the differences between the two really? Check out our comparison between Databricks and Snowflake to find out.

Snowflake + Optimized Cloud Data Lake = Flexible, Affordable Analytics

All of the above is not meant to disparage Snowflake or to imply it shouldn’t be part of your overall solution. Snowflake is a very useful data warehouse and should definitely be considered as a way to provide fast, predictable analytics performance. However, by incorporating it as part of an open, optimized data lake architecture, you can ensure you get all (or most) of the benefits of Snowflake, while still keeping your cloud costs under control.

The use of an optimized data lake plus a cloud data warehouse like Snowflake gives companies the flexibility to apply different patterns to different use cases based on cost and performance requirements.

- You can leverage a data lake’s inexpensive storage and keep all of your data – not just recent or structured data.

- Data transformation and preparation will likely be much cheaper in a data lake than in Snowflake, especially on streaming data.

- You aren’t limited in your choice of query tools. You can query the data lake directly or output to Snowflake as needed. You retain maximum flexibility and agility to work with the rest of your data as you please through other tools such as search engines, time-series databases, or ML/AI engines.

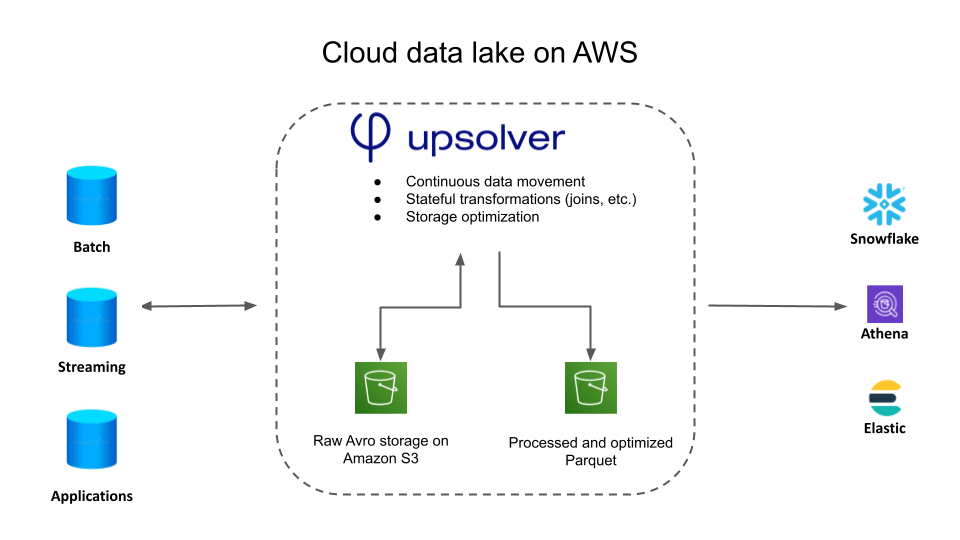

Making your data lake analytics-ready with Upsolver

Manually preparing data in a data lake, using tools such as AWS Glue or Apache Spark, is usually resource-intensive. This is where Upsolver’s data lake engineering platform comes into play. Upsolver provides a low-code, self-service, optimized compute layer on your data lake that makes your data lake performant so it can serve as a cost-effective analytics store.

Upsolver incorporates data lake engineering best practices to make processing efficient, automating important but time-consuming data pipeline work that every data lake requires to perform well. This includes:

- Converting data to query-efficient columnar formats such as Apache Parquet, rather than requiring engines to query raw data.

- Compacting files continuously to ensure performance by avoiding the “small files problem.”

- Partitioning data appropriately to speed query response.

Upsolver leverages low-cost compute options such as AWS Spot EC2 instances whenever possible to reduce the cost of queries. This can reduce compute costs by 10X over standard EC2, which itself is much less expensive than a data warehouse.

Upsolver correctly handles table UPSERTS, which means you can continuously load tables from streaming data that remain up to date as data and even schema changes.

Use Upsolver to normalize, filter, aggregate, and join data to your liking, via a visual SQL IDE to create the transformations. Then run these transformations directly on the data lake, before you write it to Snowflake (including joins, aggregations, enrichments, and so on) or query it as an external table from Snowflake’s SQL query engine.

Combining Snowflake with an Upsolver-charged data lake gives you the flexibility to run processing where it makes the most sense in terms of cost and analytics tooling choices.

You can even use Upsolver to continuously transform and stream data directly to Snowflake.

How Best to Build a Real-Time Streaming Architecture Using Snowflake, Data Lake Storage, and Upsolver on AWS

First, and most important, be clear on which of your data streams must go into Snowflake, and which can simply be stored in raw format in the lake for other purposes. (Remember not to store very old data in Snowflake; Athena is a much better tool for querying large data sets.) Then design your architecture such that transformed streamed data is outputted automatically into Snowflake.

In this reference architecture, Snowflake is just one of several data consumers. The data is optimized and reduced (sometimes significantly) for each consumer. In some cases the prepared data is most economically queried on the data lake by a query engine like Athena; in others it is output to a specialized data store:

- search or log analytics (Elasticsearch)

- OLAP querying (Snowflake)

- graph analytics (Neptune)

With an open data lake architecture such as this, you keep a single version of truth in the data lake as raw data, and on top of that you can refine and distribute the data for specific purposes.

- Upsolver ingests streams of data and stores them in raw format in a data lake.

- Data that’s intended for OLAP or ad hoc queries is prepped, cleaned, aggregated, and formatted for direct query by Athena and/or output to Snowflake to ingest and process.

- As requests arrive from other analytics services or stores, the data is prepared and optimized and then delivered to the corresponding service.

Watch how it works in this recent video:

Choose the Best Tool for Each Workload

Snowflake is a new kind of data warehouse. It offers advantages over traditional approaches through its consumption-based pricing model and its separation of storage and compute. It is not, however, the best tool for every job, especially when cost is a concern, such as running continuous jobs on streaming data. It is also a closed system that reduces long-term analytics flexibility.

Data lakes are open, more scalable, cost-effective, and capable of supporting a wider range of business cases. The challenge of data lakes has always been their data engineering complexity. But now you can use a no-code data lake engineering platform like Upsolver to quickly build pipelines to ingest, prepare, and format a virtually limitless amount of streaming data, which you can then query directly or output to Snowflake – the best of both worlds.

Additional Resources and Next Steps

- Get our benchmark report to learn how data processing in Snowflake impacts performance.

- Schedule a demo of Upsolver: https://www.upsolver.com/schedule-demo

- Try SQLake for free (early access). SQLake is Upsolver’s newest offering. It lets you build and run reliable data pipelines on streaming and batch data via an all-SQL experience. Try it for free. No credit card required.