
How to Query S3 Data Using Amazon Athena (Tutorial)

This article is part of our Amazon Athena resource bundle, which comes with a host of additional articles, ebooks, and videos to cover everything you always wanted to know about Athena. If you want everything in one place, sign up for FREE access to our Athena resource center.

We’re massive fans of Amazon Athena – not just because Upsolver is the only official partner on the Athena webpage on AWS, but also because we see the fascinating, versatile ways in which customers use Athena for business intelligence, ad hoc reporting, and data science.

In this guide, we’ll cover the basics of querying Amazon S3 using Athena, including some handy links to previous resources we’ve published on this topic, and end with a quick example and tutorial on querying S3 – specifically Apache Parquet files – as Athena tables.

Athena, S3, and How They Work Together

In a typical AWS data lake architecture, S3 and Athena are two services that go together like a horse and carriage – with S3 acting as a near-infinite storage layer that allows organizations to collect and retain all of the data they generate, and Athena providing the means to query the data and curate structured datasets for analytical processing.

Benefits of using AWS Athena when your data is stored on S3

The key advantage of using Athena is that it can read data directly from S3, using regular SQL. This helps bridge the gap between S3 object storage – which is schemaless and semi-structured – and the needs of analytics users who want to run regular SQL queries on the data (although, as we cover below, data preparation is still required).

Another advantage of Athena is that it is serverless, with compute resources provisioned by AWS on-demand and as required to answer a specific query. This makes life simpler for data engineers as there is no infrastructure to manage, unlike traditional data warehouses such as Amazon Redshift (read more about the differences between Athena and Redshift).

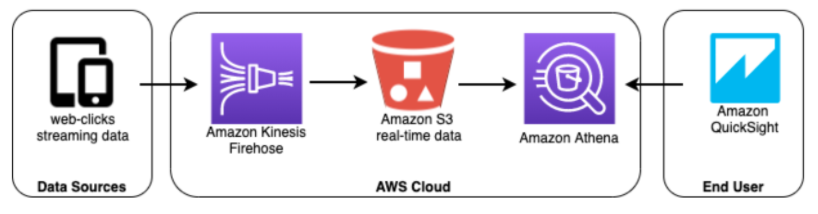

Athena S3 – Reference Architecture

Companies that collect large volumes of clickstream data from websites or applications often choose to store this data on S3 object storage to avoid the high costs of data warehouse storage. In these cases, Athena can provide a hassle-free way to query the data. The results of the Athena-S3 SQL queries can then be read by QuickSight or other visualization tools, which provide BI and dashboarding to end users.
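As a rough illustration of this flow, here is a minimal Python sketch of submitting a SQL query to Athena over clickstream data in S3. It assumes the boto3 SDK and its `start_query_execution` call; the database, table, column, and bucket names are hypothetical placeholders, not part of any real setup.

```python
"""Sketch: submit a SQL query to Athena over data that lives in S3."""


def build_query_request(sql, database, output_location):
    """Build keyword arguments for Athena's StartQueryExecution API."""
    return {
        "QueryString": sql,
        "QueryExecutionContext": {"Database": database},
        # Athena writes each query's result set to this S3 prefix.
        "ResultConfiguration": {"OutputLocation": output_location},
    }


def run_query(request, region_name="us-east-1"):
    """Submit the query with boto3 and return its execution ID.

    Needs boto3 and AWS credentials, so boto3 is imported lazily here.
    """
    import boto3

    athena = boto3.client("athena", region_name=region_name)
    response = athena.start_query_execution(**request)
    return response["QueryExecutionId"]


# Hypothetical database, table, and bucket names (assumptions).
request = build_query_request(
    sql=(
        "SELECT page_url, COUNT(*) AS views "
        "FROM clickstream_events "
        "GROUP BY page_url ORDER BY views DESC LIMIT 10"
    ),
    database="web_analytics",
    output_location="s3://example-athena-results/queries/",
)
print(request["QueryString"])
```

Calling `run_query(request)` would submit the query. The result set lands under the output location on S3, which is where downstream tools can pick it up.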

What are Athena tables?

Since Athena is based on regular SQL, it supports DDL statements and has the concept of “tables.” These behave similarly to tables in a relational database. However, unlike a traditional database, Athena uses schema-on-read rather than schema-on-write – meaning a schema is projected on the data when a query is run, rather than when the data is ingested into the database (since Athena doesn’t ingest the data but rather reads it, unmodified, from S3).

Hence, when we talk about “tables” in Athena we are actually talking about a logical namespace that describes the schema and the location where data is stored on S3. Athena uses Apache Hive-style DDL to define tables and create databases, and keeps those definitions in a Hive-compatible metastore (by default, the AWS Glue Data Catalog). It doesn’t store any of the data itself, in accordance with its serverless architecture.
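To make the idea of a table as "schema plus S3 location" concrete, the following Python sketch assembles a `CREATE EXTERNAL TABLE` statement for Parquet files. The helper name `create_table_ddl` and all database, table, column, and bucket names are hypothetical; treat it as an illustration of the DDL shape rather than a complete generator.

```python
def create_table_ddl(database, table, columns, location, partitions=None):
    """Return a CREATE EXTERNAL TABLE statement for Parquet files on S3.

    columns and partitions are lists of (name, type) pairs.
    """
    def render(pairs):
        return ", ".join(f"`{name}` {dtype}" for name, dtype in pairs)

    parts = [
        f"CREATE EXTERNAL TABLE IF NOT EXISTS `{database}`.`{table}` "
        f"({render(columns)})"
    ]
    if partitions:
        # Partition columns are declared separately from data columns.
        parts.append(f"PARTITIONED BY ({render(partitions)})")
    parts.append("STORED AS PARQUET")
    # The table only points at this prefix; no data is copied.
    parts.append(f"LOCATION '{location}'")
    return "\n".join(parts)


# Hypothetical schema and bucket (assumptions for illustration).
ddl = create_table_ddl(
    database="web_analytics",
    table="clickstream_events",
    columns=[("user_id", "string"), ("page_url", "string"),
             ("event_time", "timestamp")],
    location="s3://example-data-lake/clickstream/",
    partitions=[("dt", "string")],
)
print(ddl)
```

Running the statement only registers metadata. For a partitioned table like this one, the partitions still need to be loaded (for example with `MSCK REPAIR TABLE` or `ALTER TABLE ... ADD PARTITION`) before queries return rows.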

You can read more about Athena tables in the AWS documentation, or peruse our previous blog post covering the differences between Amazon Athena and traditional databases.

Optimizing the S3 Storage Layer for Improved Performance in Athena

Although Athena is serverless and easy to use due to its reliance on regular SQL, its performance can still be affected by the way data is stored in the underlying Amazon S3 bucket. This likely isn’t material if you’re just querying a few thousand files or a few hundred megabytes, but at larger scales it is essential to preprocess the data, both to ensure Athena performs well and to control costs.

To provide optimal performance, implement data lake best practices including storing data in columnar formats such as Apache Parquet, rather than as raw CSV and JSON; merging small files in a process known as compaction; and data partitioning. We’ve covered all of these topics in some depth before, so go ahead and click on the links to explore further. You can also check out everything we’ve ever written about Amazon Athena.
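Two of these practices, partitioning and compaction, can be sketched in a few lines of Python. The code below only plans the work: it builds a Hive-style partition prefix and groups small files into batches that a separate job would merge. The function names, the `dt=` partition key, the bucket, and the 128 MB target are all assumptions chosen for illustration.

```python
from datetime import date


def partition_prefix(base, day):
    """Return a Hive-style partition path such as .../dt=2023-01-15/."""
    return f"{base.rstrip('/')}/dt={day.isoformat()}/"


def plan_compaction(files, target_bytes):
    """Group (key, size) pairs into batches of roughly target_bytes each.

    Each batch would be rewritten as one larger file by whatever job
    performs the merge; this function only plans the grouping.
    """
    batches, current, current_size = [], [], 0
    for key, size in files:
        # Close the current batch if adding this file would overshoot.
        if current and current_size + size > target_bytes:
            batches.append(current)
            current, current_size = [], 0
        current.append(key)
        current_size += size
    if current:
        batches.append(current)
    return batches


MB = 1024 * 1024
prefix = partition_prefix("s3://example-data-lake/clickstream", date(2023, 1, 15))
# Hypothetical small files under one partition: (object key, size in bytes).
small_files = [(f"{prefix}part-{i:04d}.parquet", 40 * MB) for i in range(7)]
for batch in plan_compaction(small_files, target_bytes=128 * MB):
    print(len(batch), "files ->", batch[0])
```

The greedy grouping keeps each batch at or under the target unless a single file already exceeds it, in which case that file forms its own batch.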

Upsolver SQLake makes building a pipeline as easy as writing a SQL query. SQLake is a declarative SQL pipeline platform for both batch and streaming data for cloud data lakes. It automates tedious data preparation tasks such as orchestration and file system optimization, which otherwise would need to be coded manually using tools such as AWS Glue or Apache Spark.

Whether you go self-service or code-intensive, it’s crucial to keep your S3 storage “tidy” to make it easier for Athena and other query engines to scan and retrieve data from your data lake.

Example: Creating an Athena table from Apache Parquet on S3

So how does all of this work in practice? Let’s look at a real-life example of how SQLake enables you to copy data from Amazon S3 to a staging table in SQLake, then transform it based on your business logic and query your analytics-ready table with Athena – all using only familiar SQL.

(You can also just try it for yourself right now, using our sample data or your own data. Sign up for SQLake for free for 30 days – no credit card required.)

Video Tutorials and Additional Resources

Here’s another example – a more involved scenario demonstrating how to use SQLake to build a simple data pipeline that takes raw data from S3 and Apache Kafka and outputs analytics-ready data into Snowflake:

Want to learn more about Amazon Athena?

- Check out our complete guide to Athena performance

- Watch our webinar on ETL for Amazon Athena

- Visit our Athena solution space

Want to learn more about SQLake?

- Learn about what SQLake can do for you

- Sign up for an unlimited 30-day free trial. No credit card required.

- Visit the SQLake Builders Hub, where you can browse our pipeline templates and consult an assortment of how-to guides, technical blogs, and product documentation.

- If you have any questions, or wish to discuss this integration or explore other use cases, start the conversation in our Upsolver Community Slack channel.