
Solving PipelineOps: Automating Data Pipelines to Get More From Data Engineering

How to Turn Data Pipeline Engineering Into a Valuable Asset

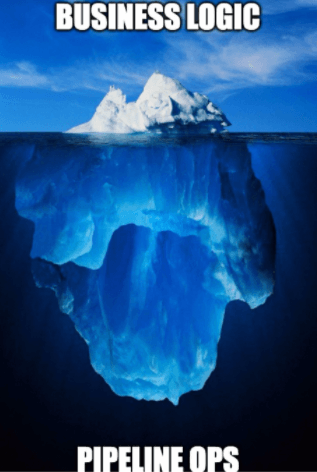

Most of a data engineer’s time still goes to manual pipeline engineering rather than to work that adds business value. To examine why, let’s deconstruct the development lifecycle of a pipeline.

The Typical Data Pipeline Tasks

Every data pipeline effort consists of:

- Designing the business logic (transformations). Defining the characteristics of the analytics-ready output your users or application require.

- Pipeline Operations. Implementing the nitty-gritty engineering and DevOps work required for running the business logic reliably and affordably in production.

Human involvement in designing transformations is unavoidable; users must outline their use case (in code or SQL) and have discussions around the trade-offs of using different logic to achieve their desired end state. For example, if you work toward per-minute granularity instead of hourly, will it query fast enough, and will it be too expensive? If you ignore that level of granularity, will the lack of data freshness lead to poor decision making? A machine shouldn’t make these decisions – at least not given the current state of AI.

But when it comes to PipelineOps, the script is flipped. Even though these operations are repeatable, in most systems humans are asked to execute them – and these activities consume 90% of a data engineer’s time. And that’s not even considering that the skills required to engineer a pipeline in code are hard to find and in high demand.

Automating PipelineOps is therefore a major opportunity to make data engineers more productive and businesses more effective at analytics. It promises an order-of-magnitude increase in data engineering capacity. If you remove PipelineOps from the equation via automation, you’re left with the business logic, which can be defined by data consumers. This aligns with the vision of the data mesh, which advocates decentralizing data engineering to the data domains, whose teams are the experts on the data semantics (the business meaning of the data). Automating PipelineOps also reduces the chance of human error being introduced into the pipeline and, if done right, can institute best practices for cost-efficient processing.

In short, the PipelineOps work is all the “subsurface” work that can sink your data project if it’s not done right. And it’s very hard to do right, at least manually.

The Two Sides of PipelineOps

You can separate PipelineOps into two sets of activities:

- The data side

- The DevOps side

The data side of PipelineOps

Schema detection and evolution

Data pipelines run on raw source data which, when you get right down to it, is usually just a bunch of files. When dealing with big data sources, developers typically don’t have certainty around some of the key properties of the source data, such as the exact schema or the “shape” of the data (volume, change velocity, empty values, value distribution, when each field first appears in the data, and so on). So they write code that runs on data samples to assess schema and statistics. But even then they only get a snapshot of one point in time. Developing data pipelines without an extensive schema detection effort is the PipelineOps equivalent of driving blind.
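To make the sampling step concrete, here is a minimal Python sketch of schema detection over a handful of records. The function name `infer_schema`, the dotted field paths, and the sample data are all hypothetical; real detection code would also need to handle arrays, file formats, and much larger samples.

```python
from collections import defaultdict


def infer_schema(records):
    """Return {field_path: {"types": set of type names, "nulls": int, "seen": int}}."""
    stats = defaultdict(lambda: {"types": set(), "nulls": 0, "seen": 0})

    def walk(value, path):
        # Recurse into nested objects so "user.id" is tracked as its own field.
        if isinstance(value, dict):
            for key, child in value.items():
                walk(child, f"{path}.{key}" if path else key)
            return
        entry = stats[path]
        entry["seen"] += 1
        if value is None:
            entry["nulls"] += 1
        else:
            entry["types"].add(type(value).__name__)

    for record in records:
        walk(record, "")
    return dict(stats)


# Hypothetical sample: the same field arrives with two different types.
sample = [
    {"user": {"id": 1, "country": "US"}, "amount": 9.5},
    {"user": {"id": "2", "country": None}, "amount": 3},
]
schema = infer_schema(sample)
for field_path, info in sorted(schema.items()):
    print(field_path, sorted(info["types"]), "nulls:", info["nulls"])
```

Even this toy version surfaces the two problems described above: `user.id` shows up with mixed types, and the result only reflects the records that happened to be in the sample.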

Execution plan and orchestration (DAGs)

Data pipelines are often executed via multiple dependent steps and staging tables, usually designed as a directed acyclic graph (DAG) in a tool like Apache Airflow. Crafting a good execution plan takes time and experience. Running the execution plan as a continuous process (orchestration) requires constant data engineering fiddling. Every change to the data pipelines (such as schema evolution) in turn can require changes across all steps of the DAG plus substantial testing, as manual errors can cause pipeline downtime, data loss, and issues with downstream data quality.
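As a rough illustration of why a single change ripples through a DAG, the Python sketch below models a pipeline as a dependency map, derives a valid execution order with the standard library's `graphlib.TopologicalSorter` (Python 3.9+), and lists every step downstream of a changed one. The step names and the helper `downstream_of` are invented for this example and are not taken from Airflow or any other tool.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
dag = {
    "stage_raw_events": set(),
    "stage_users": set(),
    "join_events_users": {"stage_raw_events", "stage_users"},
    "aggregate_hourly": {"join_events_users"},
}

# A valid execution order: every step appears after all of its dependencies.
order = list(TopologicalSorter(dag).static_order())


def downstream_of(dag, changed):
    """Return every step that directly or indirectly depends on `changed`."""
    affected = set()
    frontier = [changed]
    while frontier:
        current = frontier.pop()
        for step, deps in dag.items():
            if current in deps and step not in affected:
                affected.add(step)
                frontier.append(step)
    return affected


# A schema change in the users staging step touches everything after it.
impacted = downstream_of(dag, "stage_users")
print(order)
print(sorted(impacted))
```

In a hand-maintained DAG, each step in `impacted` is a place where someone has to review, edit, and retest code after the upstream change.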

Data lake files and metadata management

There is broad market consensus that a cloud data lake based on object storage is the best way to deal with big data. However, when using a data lake (as opposed to a database or data warehouse), you spread storage (Amazon S3, Azure Data Lake Storage), compute (Amazon EC2, Azure Virtual Machines), and metadata (AWS Glue Data Catalog, Hive Metastore) across multiple cloud services. That means data engineers must spend time integrating these services into a home-grown data pipeline platform. Any mistake that creates inconsistencies between services amounts to corrupted data.

A data lake is also based on append-only storage. That is a benefit: it allows for end-to-end lineage and provenance as well as the ability to replay data pipelines. But it also creates data engineering work to efficiently output the state of the data at a point in time. There are many best practices involved in managing a data lake to ensure consistency and high performance; data engineers’ time is thus consumed by low-level file system code. Compaction, the process of turning numerous small files into bigger files that are more efficient to process, is a well-known example of a high-value operation that improves query performance but is very hard to do well.
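The planning half of compaction can be sketched in a few lines of Python. The greedy grouping below is only one possible strategy, and the function name `plan_compaction`, the file names, and the sizes are hypothetical. The hard parts in practice (rewriting files atomically, updating the metadata catalog, not disturbing running queries) are deliberately left out.

```python
def plan_compaction(file_sizes, target_bytes):
    """Greedily group small files (name -> size) into batches of at most target_bytes."""
    batches, current, current_size = [], [], 0
    for name, size in sorted(file_sizes.items()):
        if size >= target_bytes:
            continue  # already large enough; leave it alone
        if current and current_size + size > target_bytes:
            batches.append(current)
            current, current_size = [], 0
        current.append(name)
        current_size += size
    if current:
        batches.append(current)
    # A batch with a single file would just rewrite that file, so drop it.
    return [batch for batch in batches if len(batch) > 1]


# Hypothetical partition contents; sizes are in arbitrary units.
files = {
    "part-0001": 40,
    "part-0002": 50,
    "part-0003": 30,
    "part-0004": 200,
    "part-0005": 20,
}
plan = plan_compaction(files, target_bytes=100)
print(plan)
```

Each inner list is a set of small files that would be merged into one larger file; `part-0004` is skipped because it already exceeds the target.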

Pipeline state management

Stateful pipelines are necessary for blending multiple dynamic sources of data into a single output dataset. The ability to combine streaming data with big historical data is as common for analytics organizations as using a JOIN command in a database. However, while the processing state can become very large, it must be available to pipelines at very low latency.

At high scale, it’s common to use a dedicated key-value store (such as Aerospike, Redis, Cassandra, RocksDB, or Amazon DynamoDB) for state management. Of course, this means an additional system to license and manage, yet more IT overhead/cost, and additional pipelines that require their own maintenance.

Even at modest scale, state management requires low-level tuning such as checkpointing and partitioning. Bottom line: both cases require substantial data engineering attention and expertise.
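For a sense of what “state” means here, the following Python sketch keeps the latest profile per user in memory so that incoming events can be enriched as they arrive, and serializes that state as a checkpoint so processing can resume after a restart. The class `KeyedJoinState` and its methods are hypothetical; a production system would hold this state in a key-value store and partition it across workers.

```python
import json


class KeyedJoinState:
    """Keeps the latest profile per user so events can be enriched on arrival."""

    def __init__(self):
        self.profiles = {}

    def on_profile(self, user_id, profile):
        # A newer profile record replaces the older one for the same key.
        self.profiles[user_id] = profile

    def on_event(self, event):
        # Join the event with whatever profile state exists for its key.
        profile = self.profiles.get(event["user_id"], {})
        return {**event, **profile}

    def checkpoint(self):
        # Serialize state so it can be written to durable storage.
        return json.dumps(self.profiles, sort_keys=True)

    @classmethod
    def restore(cls, snapshot):
        state = cls()
        state.profiles = json.loads(snapshot)
        return state


state = KeyedJoinState()
state.on_profile("u1", {"country": "US"})
snapshot = state.checkpoint()

# Simulate a restart: rebuild the state from the checkpoint, then keep joining.
restored = KeyedJoinState.restore(snapshot)
enriched = restored.on_event({"user_id": "u1", "amount": 5})
unknown = restored.on_event({"user_id": "u2", "amount": 7})
print(enriched, unknown)
```

The sketch fits in one process only because the state is tiny. Once `profiles` outgrows memory or has to be shared across instances, the tuning and infrastructure concerns described above take over.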

The DevOps side of PipelineOps

When data pipelines go into production, the work does not end. You have regular data engineering maintenance to perform on each pipeline, and this load grows as you build a portfolio of dozens or hundreds of pipelines. This means any tech debt you accumulate through sub-optimal pipeline development practices will multiply across all your pipelines in production.

Monitoring

A data pipeline is a production process and it requires monitoring. For each data pipeline effort you must create metrics that are reported to the central monitoring system that the ops team uses.
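A minimal Python sketch of per-pipeline metrics might look like the following. The class `PipelineMetrics`, its field names, and the pipeline name are assumptions made for illustration, and the step that actually ships the snapshot to a monitoring system is omitted.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class PipelineMetrics:
    name: str
    rows_in: int = 0
    rows_out: int = 0
    errors: int = 0
    last_event_time: Optional[datetime] = None

    def record_batch(self, rows_in, rows_out, errors, max_event_time):
        """Accumulate counters and remember the newest event time seen so far."""
        self.rows_in += rows_in
        self.rows_out += rows_out
        self.errors += errors
        if self.last_event_time is None or max_event_time > self.last_event_time:
            self.last_event_time = max_event_time

    def snapshot(self, now):
        """Return a flat dict suitable for sending to a monitoring system."""
        lag = None
        if self.last_event_time is not None:
            lag = (now - self.last_event_time).total_seconds()
        return {
            "pipeline": self.name,
            "rows_in": self.rows_in,
            "rows_out": self.rows_out,
            "errors": self.errors,
            "freshness_lag_seconds": lag,
        }


metrics = PipelineMetrics("orders_hourly")
metrics.record_batch(1000, 990, 10, datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc))
report = metrics.snapshot(now=datetime(2024, 1, 1, 12, 5, tzinfo=timezone.utc))
print(report)
```

Freshness lag is included alongside the counters because it is usually the first number data consumers ask about.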

Infrastructure management, scaling, and healing

Data pipelines often run on high and volatile volumes of data, which means they are usually deployed onto distributed processing systems across many instances. Data engineering and/or DevOps must manage that infrastructure, ensure healing on errors, upgrade software versions, and fiddle with automated scaling policies to optimize cost and latency.

Data freshness

As sure as night follows day, data consumers want their data to be fresher than it was before. SLAs move from daily/weekly to per hour/per minute, and the pressure on operational processes grows. In some ways this is the data equivalent of the manufacturing just-in-time (JIT) revolution in the ’90s, which placed more pressure on all parts of the supply chain.

Schema evolution

Big data is often controlled by distant “others” who know nothing of the downstream pain they will cause by surprising you with a new field, or a type or semantic change to an existing field. You must write code that checks the schema as the source data arrives so you avoid losing data or compromising the integrity of the pipeline output.
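The kind of check described here can be sketched in Python as a comparison between an expected schema and each arriving record. The function `detect_drift`, the expected schema, and the sample record are hypothetical. Note that the check only catches structural changes; a semantic change (same field, same type, different meaning) would pass unnoticed.

```python
def detect_drift(expected, record):
    """Compare a record against an expected {field: type} mapping."""
    new_fields = sorted(set(record) - set(expected))
    missing = sorted(set(expected) - set(record))
    type_changed = sorted(
        field
        for field in set(expected) & set(record)
        # None is treated as "no value" rather than as a type change.
        if record[field] is not None and not isinstance(record[field], expected[field])
    )
    return {"new": new_fields, "missing": missing, "type_changed": type_changed}


# Hypothetical expected schema and an incoming record that has drifted.
expected = {"id": int, "amount": float}
incoming = {"id": "7", "amount": 1.5, "coupon": "SPRING"}

drift = detect_drift(expected, incoming)
print(drift)
```

What to do with the result (quarantine the record, extend the output table, alert the owner) is a policy decision that still has to be coded for each pipeline.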

Changing consumption requirements

Besides simply wanting their data faster, downstream users (including applications) build their initial requirements based on current business conditions. As these change, so must the data pipeline on which their work depends. While these changes are usually expressed as modifications to the business logic, PipelineOps work can still be required if the change affects cost or latency.

Turning PipelineOps from a Challenge to an Opportunity

As you can see, when dealing with modern data, there is a tremendous amount of unavoidable “below the surface” code-intensive work to turn the business logic that describes the desired output of a data pipeline into a production process that performs reliably and economically at scale.

Upsolver was founded to eliminate this often tedious low-level PipelineOps engineering. Data engineers can concentrate on higher-order problems and the businesses they support can get reliable, high-performance data pipelines delivered faster. We built our SQL data pipeline platform to act as data management infrastructure for developing, executing, and managing pipelines on cloud data lakes. It requires only SQL knowledge to write the business logic and fully automates PipelineOps engineering.

Upsolver is an integrated data pipeline platform. Its visual IDE enables data engineers to build pipelines using SQL – including extensions to handle operations on streaming data, such as sliding windows and stateful joins. Then they can output data to suit their product specs. And it’s easy to create SQL-based pipeline-as-code templates that enforce corporate governance standards around formatting, semantic alignment, and PII handling and can be deployed programmatically.

Upsolver simplifies at-scale ingestion by providing no-code connectors for common file, table, and stream sources. Upsolver pipelines currently handle event flows in the millions of events per second. When you connect a source, Upsolver provides the schema (even for nested data) and data statistics to help you with data modeling. It continues to monitor the source for schema changes over time to simplify dealing with schema evolution.

Upsolver delivers Parquet-based tables that are queryable from engines like Amazon Athena, Amazon Redshift Spectrum, Starburst, Presto, and Dremio. Upsolver automates the “ugly plumbing” of table management and optimization. Analytics-ready data can also be continuously output to external systems such as a cloud data warehouse, database, or stream processor.

Upsolver implements data lake best practices automatically, which simplifies your pipeline architecture greatly. You declare your desired pipeline outputs in SQL and Upsolver does the rest. There’s no need for orchestration or transformation tools such as Airflow or dbt, for a key-value store to handle state management, or for manual management of a data catalog (AWS Glue Data Catalog or the Apache Hive Metastore).

Because it’s a managed cloud-native service with a purpose-built processing engine, it scales automatically and affordably based on load and your business rules. And because it runs as a managed service in your cloud account (VPC), your data never leaves your ownership. Data-centric companies like IronSource and SimilarWeb run hundreds of terabytes to petabytes a day of streaming data through Upsolver pipelines today.

So if you want to be king of the world, you need to get PipelineOps under control. Otherwise your data engineering team, and your analytics efforts, will struggle to stay afloat.

Try SQLake for free (early access). SQLake is Upsolver’s newest offering. It lets you build and run reliable data pipelines on streaming and batch data via an all-SQL experience. Try it for free. No credit card required.