
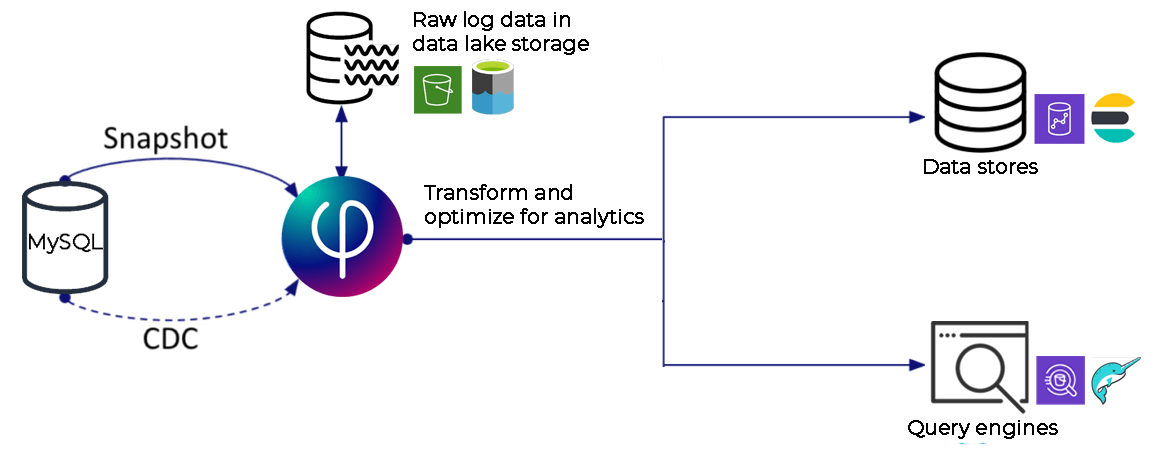

Use SQL Data Pipelines to Unlock the Value of your Cloud Data Lake

Cloud data lakes are reliable, scalable and affordable. However, the complexity of writing code and orchestrating pipelines on raw object storage has held data lakes back from having the impact they should.

Upsolver uses a declarative approach based on SQL transformations to eliminate those barriers, so you get simplicity combined with data lake power and affordability.

Combine Streaming and Historical Batch Data for Real-time Analytics

Continuously deliver up-to-the-minute, analytics-ready tables to query engines, cloud data warehouses and streaming platforms, even with nested, semi-structured and array data. No more stale analytics based on nightly or weekly jobs.

Reduce Cost and Effort vs. Data Warehouse ELT

Process complex, continuous data on your cost-effective data lake for downstream use in your data warehouse. Greatly reduce your data warehouse costs for ingestion, compute and storage. Build pipelines using SQL and automation to cut your data engineering overhead and shorten time-to-value.

Additional Solutions by Use Case

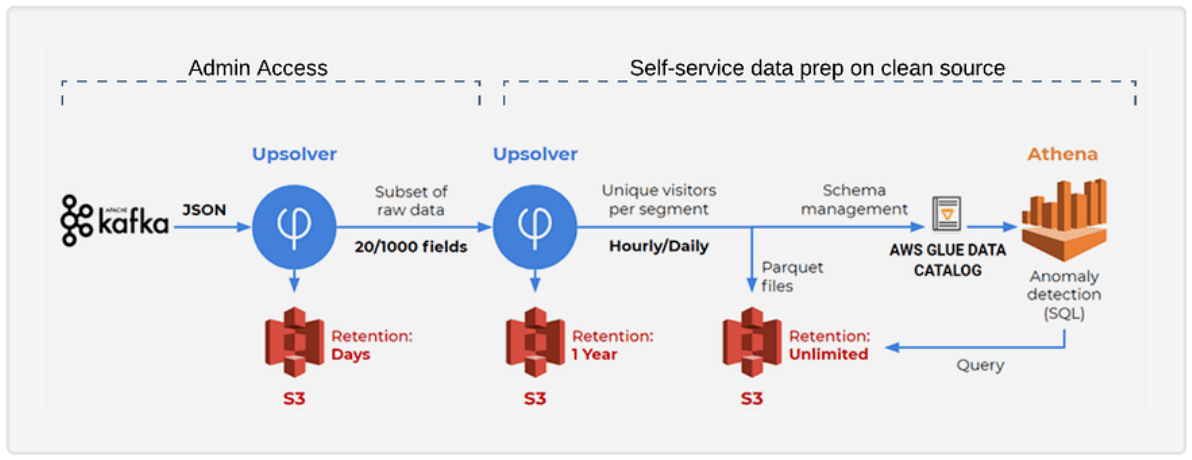

Log Analytics

Learn how Upsolver helps analyze log data in near real time.

Data Lake Ingestion

Store event streams as optimized Parquet, in a click.

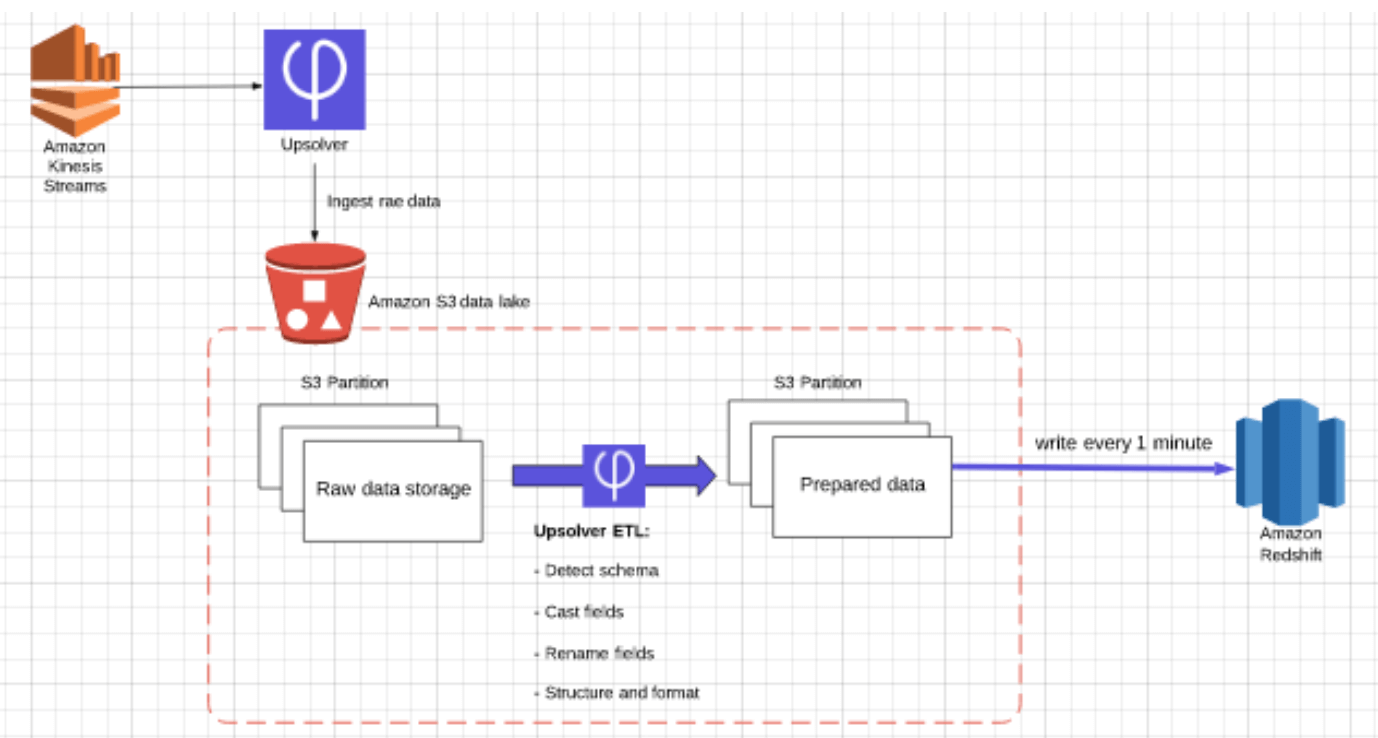

Kafka to Redshift

Get continuous data into analytics-ready Redshift tables.

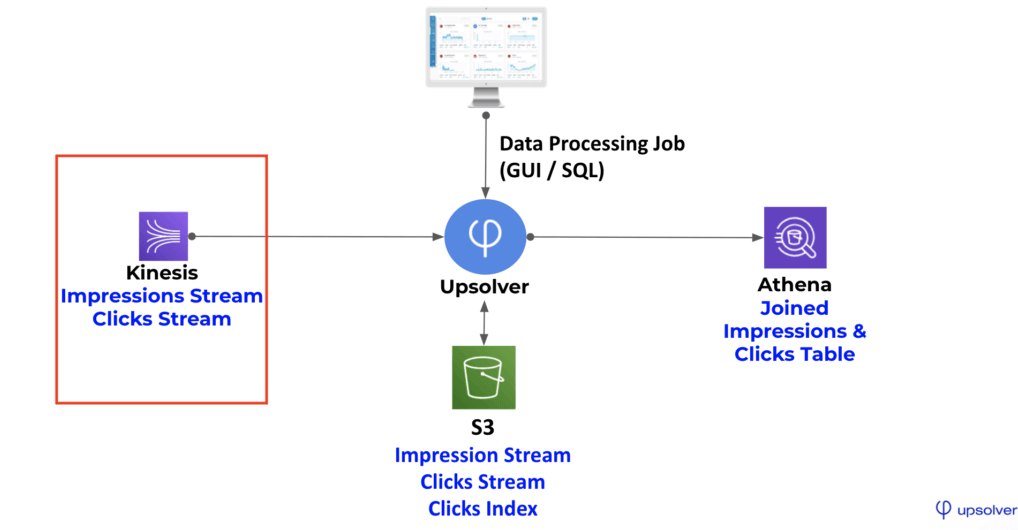

Kinesis to Athena

Simplify storage, reduce query costs, and improve performance.