Explore our expert-made templates & start with the right one for you.

Iceberg Architecture Examples: How Iceberg powers data and ML applications at Adobe, Netflix, LinkedIn, Salesforce, and Airbnb

Exploring Iceberg? Don’t miss our Iceberg Table Optimization Techniques e-learning module, covering essential optimization techniques crucial for maintaining a healthy Iceberg architecture according to best practices. Watch FREE here

With many tech giants like Netflix now processing petabytes of data daily, there has been an influx in the number of new data lake storage solutions developed in recent years. One of the most notable is Apache Iceberg, a high-performance format for huge analytic tables.

At Upsolver, we’re all in on Iceberg, and are seeing growing interest in adopting Iceberg for a variety of engineering-focused use cases. To help explain the potential use cases and architecture of Iceberg, we’ll use this article to highlight several interesting use cases from top engineering teams that showcase this technology’s versatility and advantages over alternatives.

What is Apache Iceberg?

Apache Iceberg is an open table format initially developed by Netflix. It was open-sourced in 2018 as an Apache Incubator project before being released in 2020.

Iceberg is designed for slow-moving (hence the name!) petabyte-scale tables in the cloud. Tracking files individually rather than at the folder level, Iceberg helps data teams avoid data consistency and performance issues that have historically plagued Apache Hive.

Iceberg’s tracking of individual files also negates the need for expensive list operations. This leads to further performance improvements when performing operations such as querying data within a table.

How Iceberg is used by data and tech teams at Adobe, Netflix, LinkedIn, Salesforce, and Airbnb

In recent years, many engineering teams have adopted Iceberg as the foundation for their data lake or data lakehouse architecture. This choice is typically motivated by a desire for better performance, scalability, interoperability with data processing frameworks such as Apache Spark, incremental data processing, and compatibility with existing Hive-based data.

Here’s a quick rundown of how different engineering teams at large companies like Adobe, LinkedIn, and Netflix use Apache Iceberg within their workflows.

1. Airbnb migrates legacy HDFS to Iceberg on S3

Airbnb’s Data Warehouse (DW) storage was previously migrated from HDFS clusters to Amazon S3 for better stability and scalability. Although the engineering team had continued to optimise the workloads that operate on S3 data, specific characteristics of these workloads introduced limitations that Airbnb’s users regularly encounter.

One of the biggest challenges was the company’s Apache Hive Metastore, which, with an increasing number of partitions, had become a bottleneck, as had the load of partition operations. Airbnb’s engineers added a stage of daily aggregation as a workaround and kept two tables for queries of different time granularities, but this was a time sink.

This motivated the Airbnb team to upgrade its DW infrastructure to a new stack based on Apache Iceberg. From the get-go, this solved many of the company’s challenges:

- Iceberg’s partition information is not stored in the Hive Metastore, thus reducing a large amount of load from it.

- Iceberg’s tables don’t require S3 listings, which removes the list-after-write consistency requirement and, in turn, eliminates the latency of the list operation.

- Iceberg’s spec defines a standardized table schema, guaranteeing consistent behaviour across compute engines.

Source: Airbnb engineering

All in all, Airbnb experienced a 50% compute resource-saving and 40% job elapsed time reduction in its data ingestion framework with Iceberg and other open source technologies.

>> Read more on Medium

2. LinkedIn scales data ingestion into Hadoop

Professional social media platform LinkedIn ingests data from various sources, including Kafka and Oracle, before bringing it into its Hadoop data lake for subsequent processing.

Apache Gobblin FastIngest is utilised as part of this workflow for data integration, which, at the time of its implementation in 2021, enabled the company to reduce the time it takes to ingest Kafka topics into their HDFS from 45 to just five minutes.

LinkedIn’s Gobblin deployment works alongside Iceberg in the company’s Kafka-to-HDFS pipeline, leveraging its table format to register metadata to guarantee read/write isolation while simultaneously allowing downstream pipelines to consume data on HDFS incrementally.

In production, this pipeline runs as a Gobblin-on-Yarn application that uses Apache Helix to manage a cluster of Gobblin workers that continuously pull data from Kafka and write it in ORC format into LinkedIn’s HDFS. This significantly reduces ingestion latency. The inclusion of Iceberg enables snapshot isolation and incremental processing capabilities in their offline data infrastructure.

>> Read more on LinkedIn Engineering

3. Netflix builds an incremental processing solution to support data accuracy, freshness, and backfill

Netflix has an end-to-end reliance on well-structured and accurate data. However, as the company scales globally, its demand for data and scalable low-latency incremental processing is increasing.

Maestro, the company’s proprietary data workflow orchestration platform, is at the core of Netflix’s data operations. This provides managed workflow-as-a-service to the company’s data platform users. Maestro serves thousands of daily users, including data scientists, content producers, and business analysts across various use cases.

To provide a solution for its growing incremental processing needs, Netflix combined its Maestro platform with Iceberg to achieve incremental change capture in a scalable and lightweight way. This is achieved without copying any data, thereby enhancing reliability and accuracy while simultaneously preventing the unnecessary bloating of compute resource costs.

With this design, Maestro’s users can adopt incremental processing with low effort, mix incremental workflows with existing batch processes, and build new workflows much more efficiently. This bridges gaps for solving a variety of challenges in a more straightforward way, such as dealing with late-arriving data.

>> Read more on the Netflix tech blog

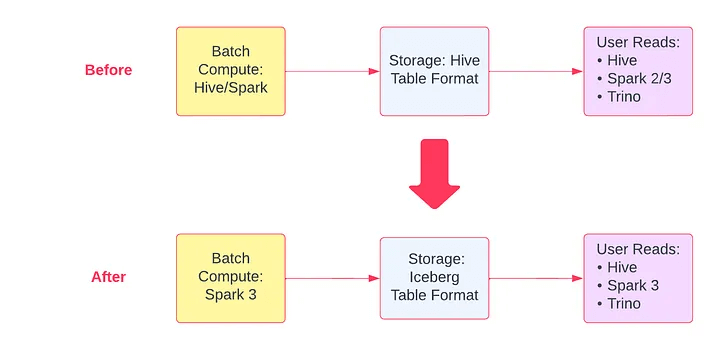

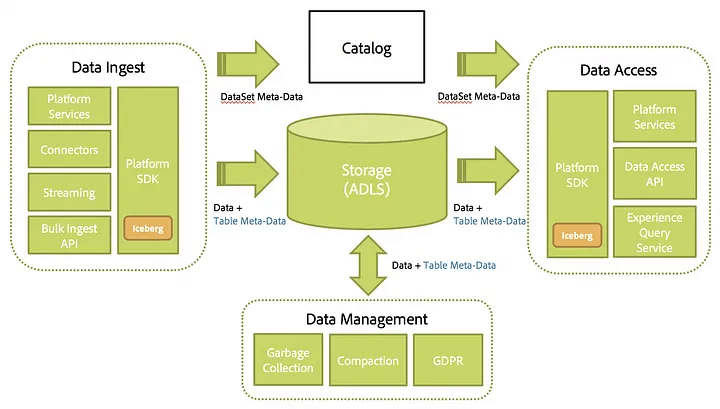

4. Adobe processes billions of events to personalize online experiences

Adobe was one of the earliest adopters of Iceberg in 2020 when it was integrated into the Adobe Experience Platform (AEP) in response to data reliability and scalability challenges.

According to Adobe, managing analytical datasets using Spark at the scale their customers operated had “proven to be a challenge” for reasons including:

- Data reliability: A lack of schema enforcement led to type inconsistency and corruption.

- Read reliability: Massive tables resulted in slower directories and file listing – O(n), and coarse-grained split planning, leading to inefficient data scans.

- Scalability: An overarching dependency on a separate metadata service risked a single point of failure.

Meanwhile, the AEP Data Lake was processing around 1 million batches and 32 billion events per day, which was set to increase dramatically as more Adobe solutions and customers migrated onto AEP in 2021.

Iceberg addressed many of these problems out of the box, and its lightweight design enabled Adobe to implement it without incurring any additional operational overhead and scale it horizontally with its Spark applications.

Source: Adobe

>> Read more on the Adobe developer blog

5. Salesforce uses Iceberg for ML Lake, its data platform for machine a learning

Salesforce continuously uses machine learning to improve its product suite, and the company’s ML Lake solution is the backbone of all its ML capabilities. This shared service manages data pipelines, storage, security, and compliance on behalf of ML developers.

ML applications typically interact with ML Lake by requesting metadata for a particular dataset containing a pointer to an S3 path that houses the data, requesting a data access token, and interacting with the data using S3 API or data tooling like Apache Spark.

As most of Salesforce data is structured with custom schemas, ML Lake needs to support structured datasets and allow partitioning and filtering of large data sets. It also needs to support consistent schema changes and data updates. While the current go-to standard for table formats is Apache Hive, it only addresses a small amount of ML Lake’s needs.

Faced with a need to scale not only in total data size but also the number and variety of datasets that it stores—Salesforce cannot maintain a selection of critical datasets due to the scope of its solutions—the company migrated to Apache Iceberg to leverage its better schema evolution. This is now the table format for all of ML Lake’s structured datasets.

>> Read more on Salesforce engineering

What are you building with Iceberg?

As we’ve seen, there has been a shift away from legacy data lake and warehousing solutions towards Apache Iceberg, which offers significant improvements in the form of schema evolution, partitioning, time travel, scalability, and interoperability. In many cases, companies are building large-scale ingestion architectures around Iceberg and other open-source tools such as Spark.

Upsolver can get you there faster. We make it easy to ingest streams, files, or change-data-capture workloads into your Iceberg-based data lake, at any scale. If you’re ingesting more than a terabyte of data, Upsolver’s optimized data ingestion guarantees you can save on storage and processing costs while improving performance. To benchmark the scale of improvement you’d see with Upsolver, create an account or try the open-source Iceberg Table Optimizer.