Explore our expert-made templates & start with the right one for you.

Finding the Geolocation of an IP Address

Use GEO_IP to to Extract IP Address Location

IP addresses are how computers communicate with each other. It is also how we track computers that communicate with our services – front-end applications, back-end servers, and databases. IP addresses are useful when tracking users through our site and to identify potentially malicious actors attempting to access our systems. Upsolver SQLake provides a useful function called GEO_IP that uses the MaxMind GeoIP2 service to look up the location of a given IP address. In this guide we walk through a few examples of how to use GEO_IP to extract the location information from IP addresses.

The GEO_IP function takes two string parameters:

- an attribute (or array of attributes) representing the geolocation field you want to extract

- an IP address.

The following is the function’s signature:

GEO_IP(attributes1[, attributes2, …, attributesN], IP Address)

In SQLake, you can use this function in a transformation job as shown below:

CREATE SYNC JOB transform_ip_with_geoip

RUN_INTERVAL = 1 MINUTE

ADD_MISSING_COLUMNS = TRUE

AS INSERT INTO catalog.prod_db.ip_with_location MAP_COLUMNS_BY_NAME

SELECT

ip_address,

GEO_IP('Country Name', ip_address) as ip_country

FROM catalog.stage_db.raw_data

WHERE $event_time BETWEEN RUN_START_TIME() AND RUN_END_TIME();

The output produced by this job would look something like the following:

| ip_address | country |

| “103.22.200.10” | “Japan” |

| “103.21.244.9” | “United States” |

Using SQLake to Find the Geolocation of an IP Address

There are 4 quick steps involved:

- Create a connection to Amazon S3 with sample data

- Create a staging table and job to ingest raw data

- Create a target table to hold enriched data

- Create transformation job that use GEO_IP to enrich the data

Let’s walk through the steps.

1. Create a connection to Amazon S3 with sample data

To help demonstrate how to use GEO_IP to extract multiple location information such as country and city name, we will use sample data hosted by Upsolver and made available through S3.

Create a connection to S3. The IAM role in the following snippet is provided for you to access the data in S3.

CREATE S3 CONNECTION upsolver_s3_samples AWS_ROLE = 'arn:aws:iam::949275490180:role/upsolver_samples_role' EXTERNAL_ID = 'SAMPLES' READ_ONLY = TRUE;

2. Create a staging table and job to ingest raw data

Create a staging table in the data lake to hold the raw data. This step is simple and you don’t need to know the raw schema; SQLake automatically detects it and updates the AWS Glue Data Catalog.

Create the staging table in the AWS Glue Data Catalog. Note that you must update the db_name to use your database name.

CREATE TABLE default_glue_catalog.<db_name>.sales_info_raw_data() PARTITIONED BY $event_date;

We’re using the $event_date system column to partition the staging table. SQLake attempts to detect whether the event contains an event date column to populate this field; otherwise SQLake uses the date the event was ingested.

After creating the table, we create an ingestion job that copies the raw events from the source S3 bucket into the target table. SQLake converts the data to columnar format, partitions it, and manages it over time to ensure best query performance.

Create the ingestion job to copy raw data into the target table.

CREATE SYNC JOB load_sales_info_raw_data_from_s3

CONTENT_TYPE = JSON

AS COPY FROM S3 upsolver_s3_samples

BUCKET = 'upsolver-samples'

PREFIX = 'sales_info/'

INTO default_glue_catalog.<db_name>.sales_info_raw_data;

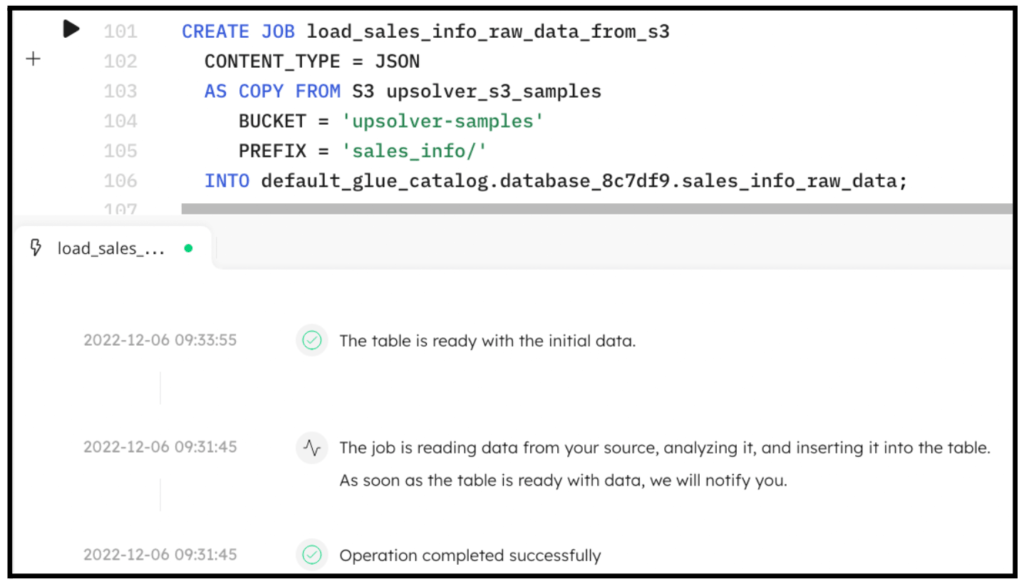

The following screenshot shows the execution of the ingestion job. In the console, SQLake reports the progress and notifies you when the data is available to query.

You can easily query the staging table directly from the SQLake worksheet. For example:

SELECT * FROM default_glue_catalog.<db_name>.sales_info_raw_data LIMIT 10;

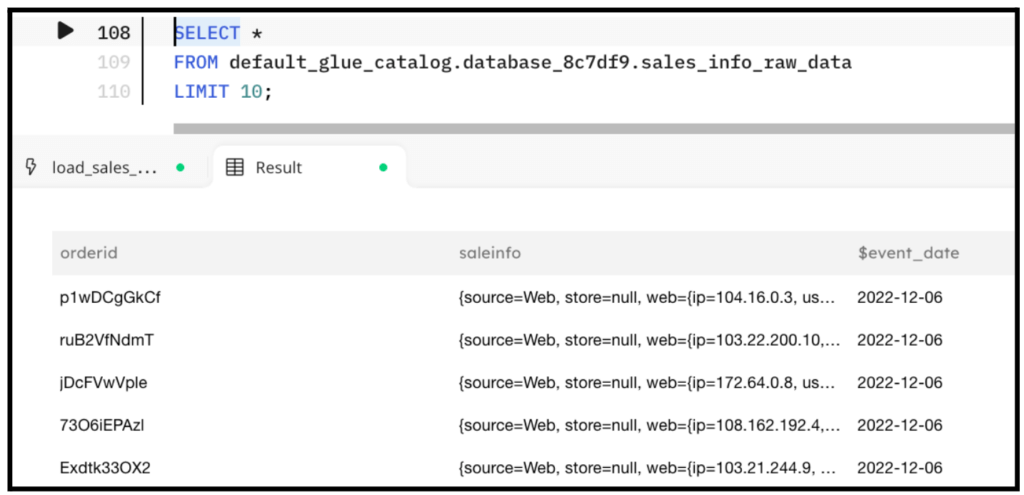

The following screenshot shows the results of the query. You can see the IP address as part of the salesinfo nested field. We will use this in the next step.

3. Create a target table to hold the enriched data

Now that the raw data is staged, create the target table in the AWS Glue Data Catalog to hold the enriched data.

Create the target table.

CREATE TABLE default_glue_catalog.<db_name>.sales_ip_enrichment ( partition_date date ) PARTITIONED BY partition_date;

Notice we’re defining partition_date column as the partition column for this table. This column will get its value from the $event_time column.

4. Create a transformation job that use GEO_IP to enrich the data

This is the step where the interesting work is performed. We will create a transformation job that uses the GEO_IP function to extract multiple attributes from the salesinfo.web.ip field.

Create the transformation job.

CREATE SYNC JOB enrich_ip_address

START_FROM = BEGINNING

RUN_INTERVAL = 1 MINUTE

ADD_MISSING_COLUMNS = TRUE

AS INSERT INTO default_glue_catalog.<db_name>.sales_ip_enrichment MAP_COLUMNS_BY_NAME

SELECT

orderid AS orderid,

saleinfo.web.ip AS ip_address,

GEO_IP('Continent Code',saleinfo.web.ip) AS ip_continent,

GEO_IP('Country Name',saleinfo.web.ip) AS ip_country,

GEO_IP('City Name',saleinfo.web.ip) AS ip_city,

GEO_IP('Subdivision 1 Name', saleinfo.web.ip) AS ip_subdivision,

GEO_IP('Time Zone', saleinfo.web.ip) AS ip_timezone,

$event_date AS partition_date

FROM default_glue_catalog.<db_name>.sales_info_raw_data

WHERE saleinfo.source = 'Web'

AND $event_time BETWEEN RUN_START_TIME() AND RUN_END_TIME();

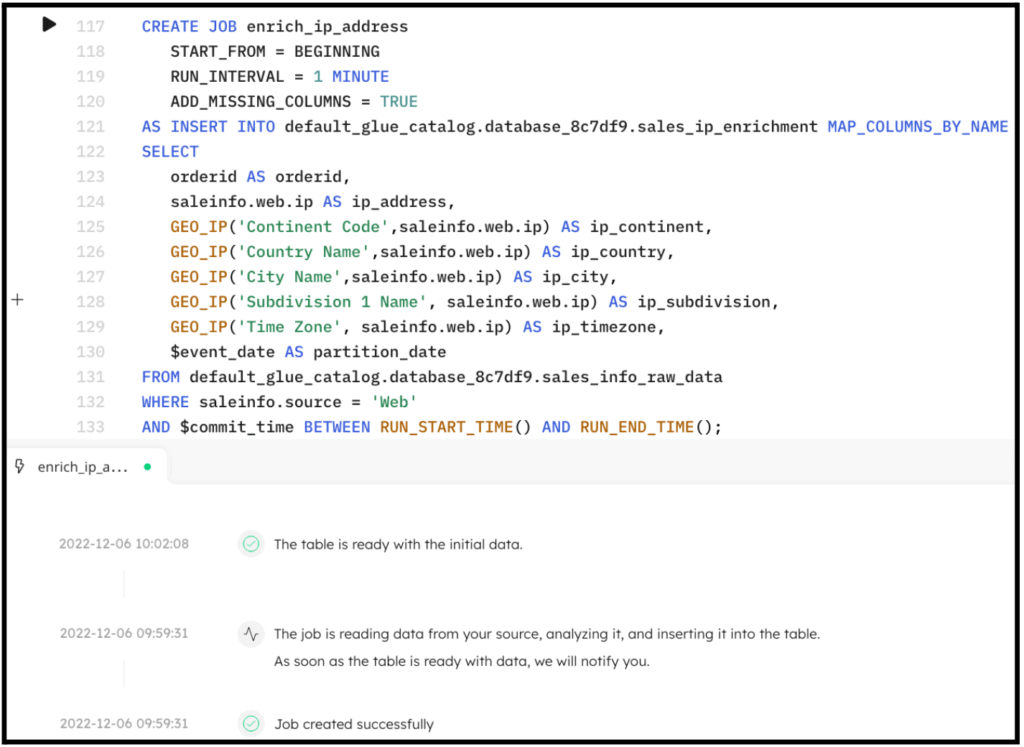

By executing the previous code in SQLake, the job is created. After a couple minutes the data is fully transformed and ready to query. The following screenshot shows the execution of the job.

Query the target table to see the results of the enrichment.

SELECT * FROM default_glue_catalog.<db_name>.sales_ip_encrichment LIMIT 10;

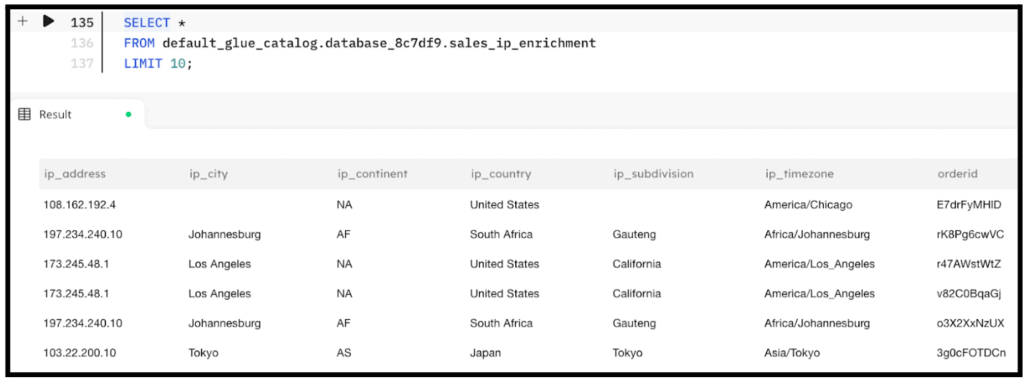

The output of the query looks similar to the following screenshot:

Summary

That’s it!! In only a few minutes and with just several lines of SQL you were able to ingest data from S3 into the data lake, extract location information from IP addresses using the GEO_IP function, and produce an enriched dataset that you can then use for analytics, reporting, auditing, and alerting.

SQLake makes it easy to work and extract meaning from data quickly. You can find more useful functions in the documentation page.

Get started today for free with sample data or bring your own.

Discover how companies like yours use Upsolver

Data preparation for Amazon Athena.

Browsi replaced Spark, Lambda, and EMR with Upsolver’s self-service data integration.

Read case studyBuilding a multi-purpose data lake.

ironSource operationalizes petabyte-scale streaming data.

Read case studyDatabase replication and CDC.

Peer39 chose Upsolver over Databricks to migrate from Netezza to the Cloud.

Read case studyReal-time machine learning.

Bigabid chose Upsolver Lookup Tables over Redis and DynamoDB for low-latency data serving.

Read case study

Start for free - No credit card required

Batch and streaming pipelines.

Accelerate data lake queries

Real-time ETL for cloud data warehouse

Build real-time data products