
Easy button for high-scale data in

Enjoy the cost savings and shared access of a cloud-native lakehouse, without the engineering pain.

27 trillion rows ingested per month

50 PB managed per month

35 GB/sec peak throughput

Data movement at scale

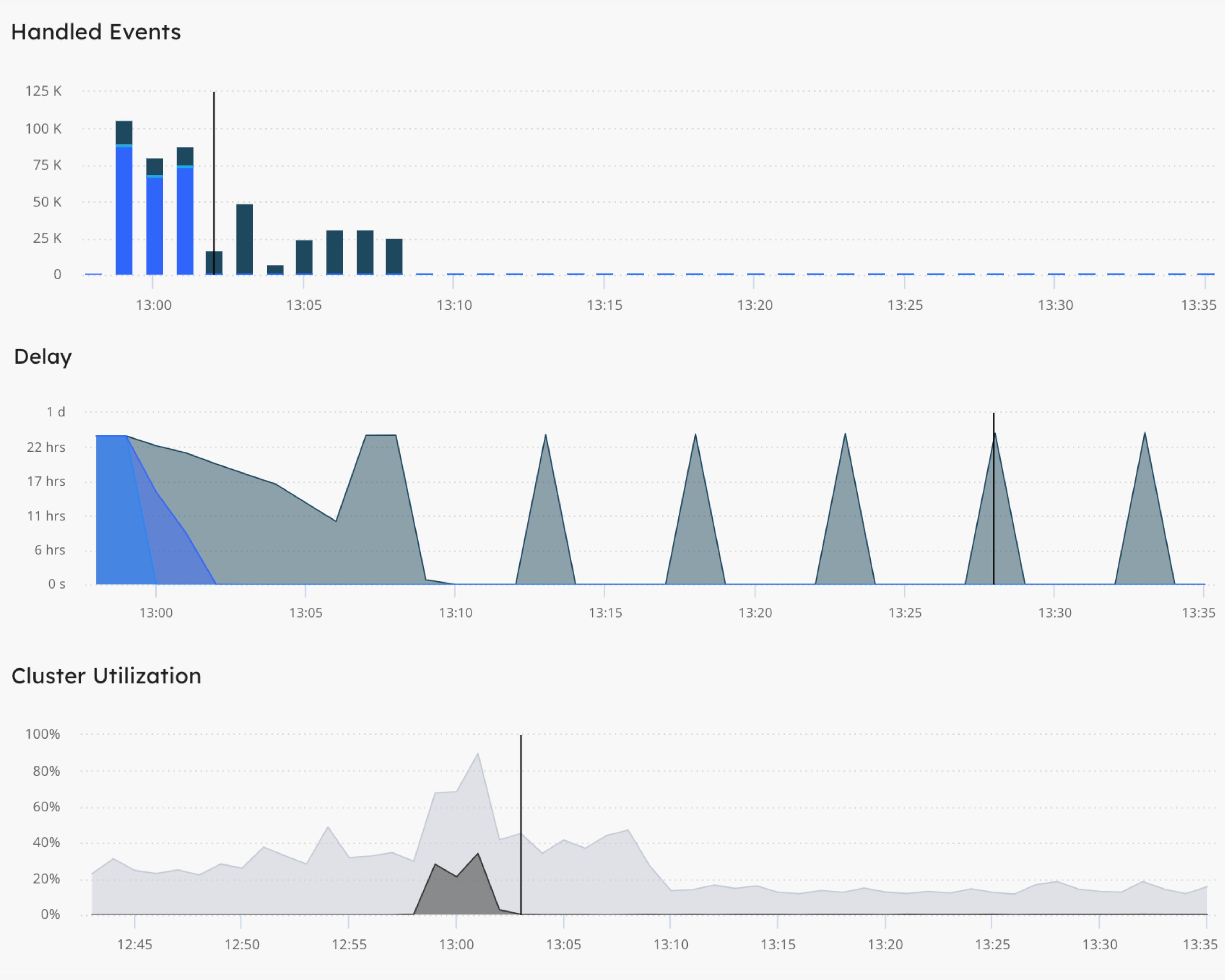

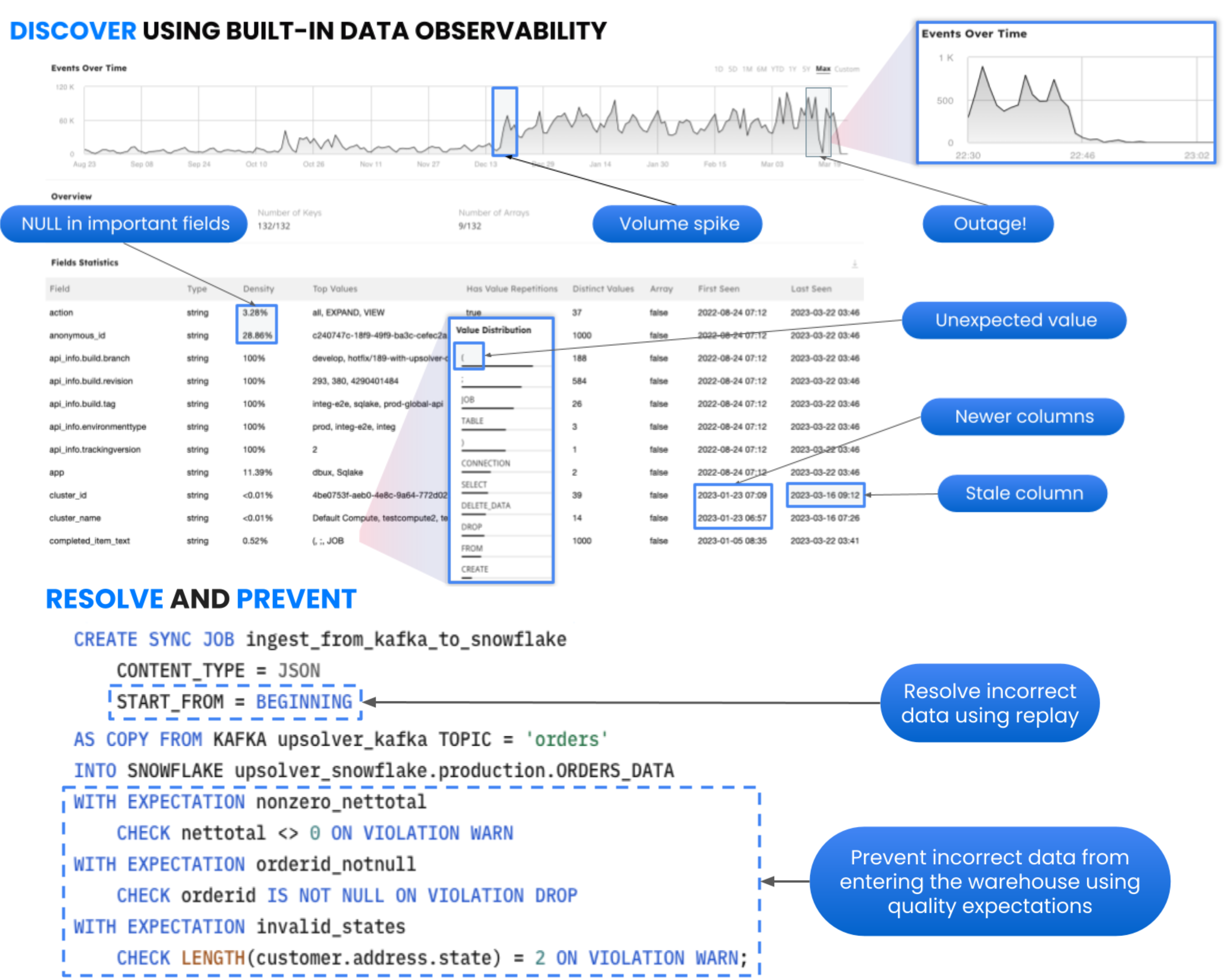

Ensure your critical data is delivered to downstream users reliably, accurately and on time.

When you combine high-volume data with frequent updates and in-flight processing, you need expert plumbing. Upsolver moves data from complex sources (streams, CDC, files) into your warehouse or lake reliably and at scale.

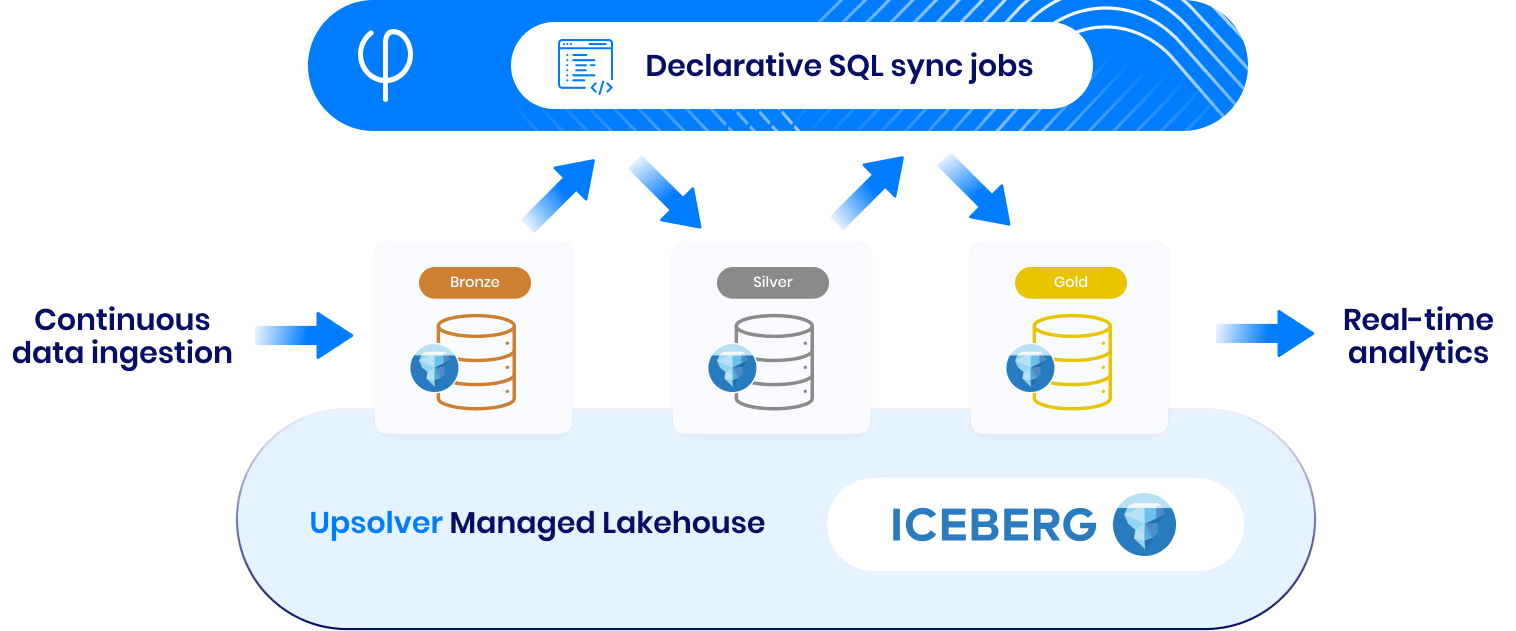

Open lakehouse control center

Let users in every business function access data their way, while you stay in charge.

Avoid functional silos and inconsistent performance in the lakehouse by using Upsolver as your control center: a single pane of glass for all your data, based on the open Apache Iceberg table format.

Build, monitor & share

Empower every part of your business with up-to-the-minute data

Easily ingest data from operational stores, streams, and files into the warehouse and open Iceberg lakehouse. Then transform, observe, and manage your unified data from the control center.

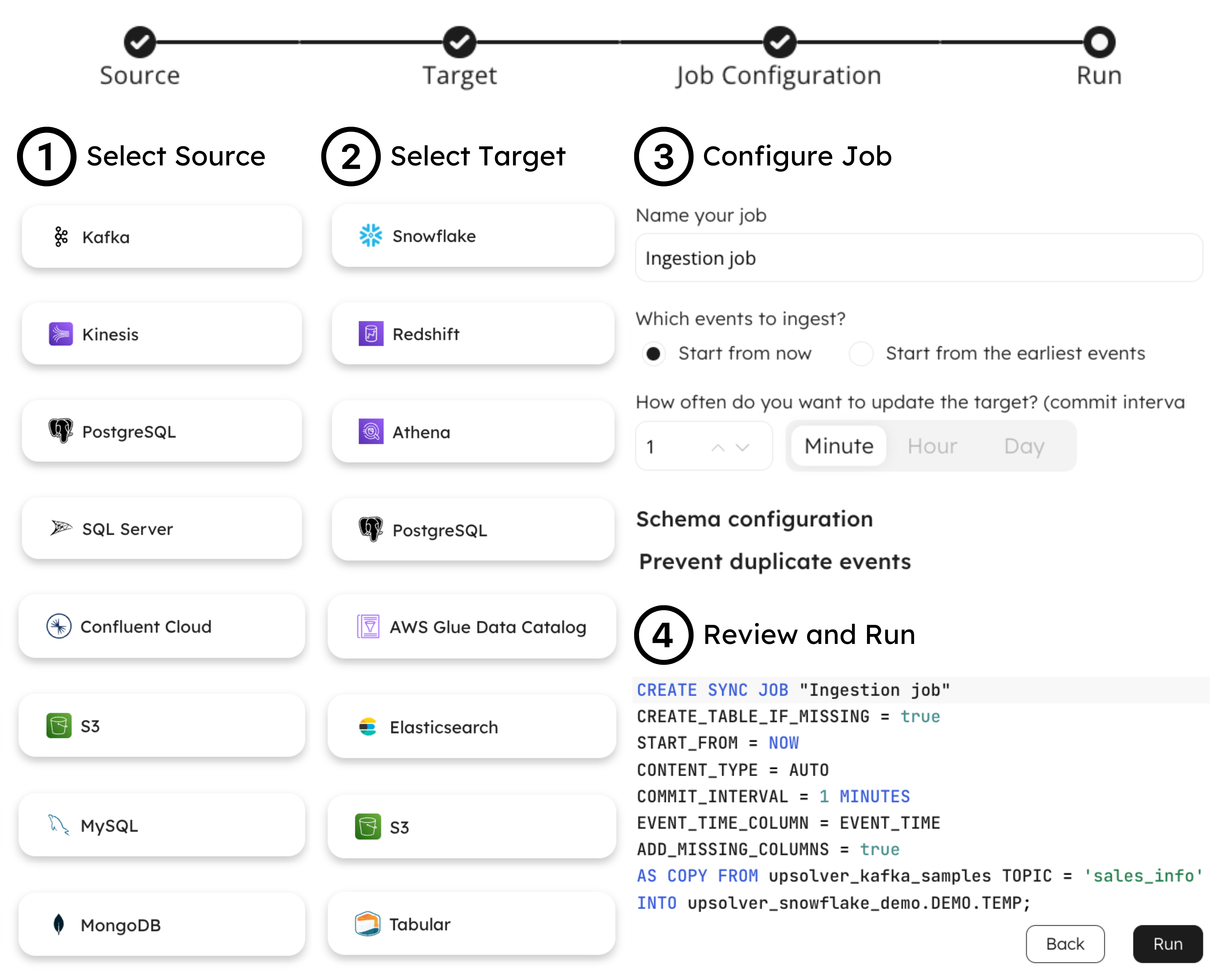

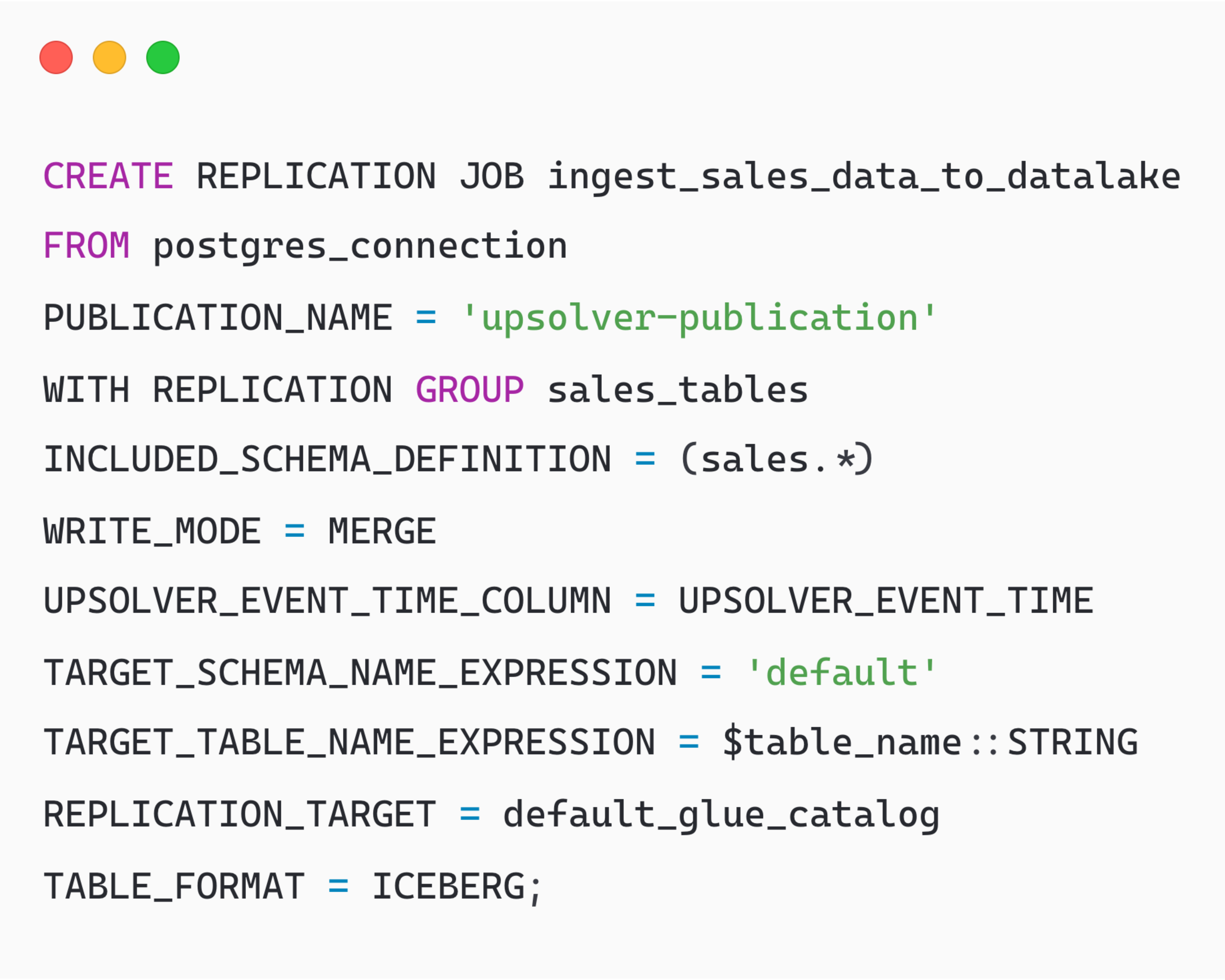

Zero-ETL: ingest data at scale

Transform with declarative SQL

Observe metrics in real time

Share with the open ecosystem

We'll take care of performance

With continuous analysis and opportunistic optimization of your data movement jobs and lakehouse tables.

Unified data, unified dev experience

Upsolver matches your development style and gives you the tools you need to deliver high-quality, on-time data.

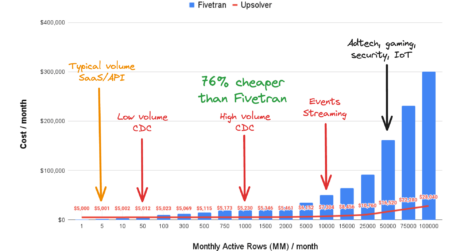

Designed and priced for scale

Upsolver charges by data volume. A competitor charges by ‘active rows’.

This key difference drives order-of-magnitude savings as data volumes scale.

Empowering the next generation of data developers

From startups to enterprises