Make your Snowflake Dollars Go 5X as Far

Using Upsolver as a SQL-based serving layer for Snowflake delivers up-to-the-minute data freshness – at tremendous scale – for a fraction of the cost.

Key Takeaways

- Cloud data warehouses struggle to prepare real-time data affordably.

- Upsolver offers a cost-effective serving layer that can process millions of events per second into fresh, query-ready Snowflake tables.

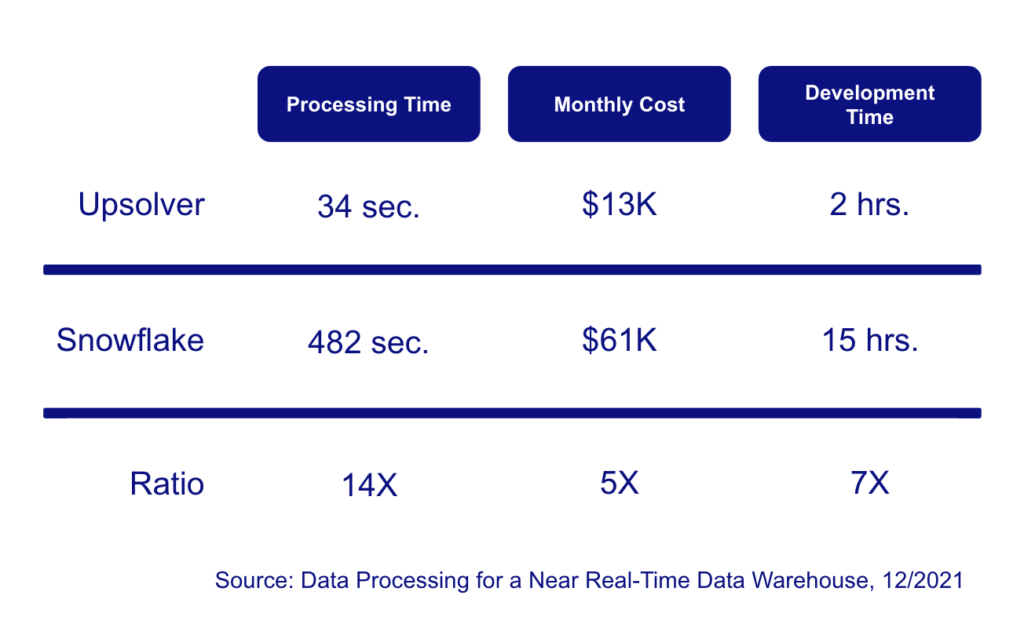

- A recent benchmark showed Upsolver provides 14X faster processing for 20% of the cost of the same job run in Snowflake.

Snowflake struggles with continuous data processing

If you’re moving from nightly to hourly or per-minute data freshness, you need to reconsider using your data warehouse for data processing (an ELT pattern). Snowflake and other cloud data warehouses were built for queries on batch data; they are slow and expensive when it comes to real-time processing of big data and streaming data. The excessive cost of continuous ingestion and transformation can drive the majority of a company’s data warehouse bill, often leading companies to deploy a separate ELT cluster to isolate expenses.

Data warehouse billing models, which charge for the clock time that a cluster is running, are a poor fit for continuous processing workloads. They work well for time-bounded batch ingestion and working-hours queries, but become expensive when the warehouse is processing data 24/7. In addition, creating and maintaining production-grade pipelines takes both valuable data engineering time and additional orchestration software, such as Apache Airflow or dbt, which adds cost and requires specialized engineering effort to build and maintain.
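To make the billing point concrete, here is a back-of-the-envelope sketch in Python. It assumes the standard Snowflake warehouse credit rates (doubling with each size), a Large warehouse, a 30-day month, and a hypothetical one-hour nightly batch window; it ignores per-second billing details and the price per credit, which varies by edition and region.

```python
# Credits per hour for standard Snowflake warehouse sizes (each size doubles).
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16, "2XL": 32, "3XL": 64}


def monthly_credits(size: str, hours_per_day: float, days: int = 30) -> float:
    """Credits consumed in a month by a warehouse running hours_per_day each day."""
    return CREDITS_PER_HOUR[size] * hours_per_day * days


# Hypothetical workloads: a one-hour nightly batch vs. always-on processing.
nightly_batch = monthly_credits("L", hours_per_day=1)
continuous = monthly_credits("L", hours_per_day=24)

print(nightly_batch)               # 240
print(continuous)                  # 5760
print(continuous / nightly_batch)  # 24.0
```

Under these assumptions, the same warehouse consumes 24 times as many credits once it has to run around the clock, regardless of how much data each minute actually contains.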

Upsolver serves real-time tables to Snowflake at a fraction of the cost and engineering effort

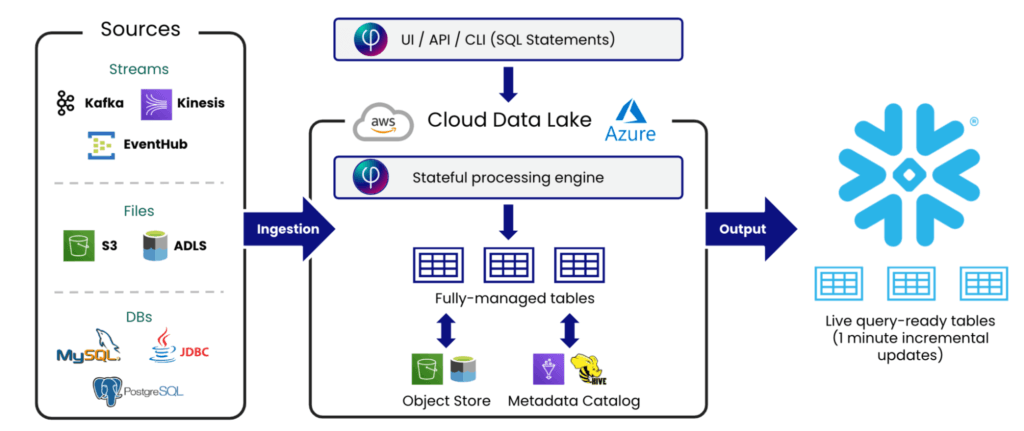

Upsolver is a data transformation engine that executes SQL commands to continuously prepare batch and streaming data on your cloud data lake, and output it to Snowflake and other analytics systems.

By processing data in this manner you can simply update output tables on your main Snowflake DW without the cost of a separate, expensive data prep cluster.

Key Upsolver features include:

- SQL statements to declare stateful operations – e.g. aggregations, joins, window functions

- Automated ingestion, orchestration and optimization (no Airflow or dbt required)

- Efficient target table updates (incremental ETL) in Snowflake

- Near-real-time – per-minute micro-batching of all sources

- Affordable – commodity storage (e.g. S3) and low-cost compute (e.g. Spot instances)

Upsolver ingests batch files and streaming data at millions of events per second, performs stateful transformations in real-time, and continually updates Snowflake query-ready tables.
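For readers unfamiliar with per-minute micro-batching, the idea can be sketched in a few lines of Python. This is a conceptual illustration only, not Upsolver's implementation: the event shape `(timestamp_seconds, event_type)` and the function names are hypothetical.

```python
from collections import defaultdict


def minute_bucket(ts_seconds: int) -> int:
    """Round a timestamp down to the start of its one-minute window."""
    return ts_seconds - ts_seconds % 60


def aggregate_micro_batches(events):
    """Count events per (minute window, event type)."""
    counts = defaultdict(int)
    for ts, event_type in events:
        counts[(minute_bucket(ts), event_type)] += 1
    return dict(counts)


# Hypothetical events: (timestamp in seconds, event type).
events = [(0, "click"), (30, "click"), (59, "view"), (60, "click"), (125, "view")]
per_minute = aggregate_micro_batches(events)
print(per_minute)
# {(0, 'click'): 2, (0, 'view'): 1, (60, 'click'): 1, (120, 'view'): 1}
```

Each window's aggregate is small and keyed, so only the rows for the most recent minute need to be written to the target table, rather than recomputing the whole table.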

Benchmark findings

We recently benchmarked four approaches to implementing near real-time analytics using the Snowflake Data Platform. We compared them on four criteria:

- End-to-end latency: from raw data ingestion to analytics-ready data

- Costs: from both cloud infrastructure and human resources

- Agility: time to create each pipeline and configure the supporting systems

- Complexity of infrastructure: what the data team will need to maintain

The four methods we compared were:

- Upsolver to Snowflake: Data is ingested and processed by Upsolver before being loaded into Snowflake query-ready target tables.

- Snowflake Merge Command: The new data is matched against, and then merged into, the existing target tables.

- Snowflake Temporary Tables: The new data is aggregated into a new temporary table, which replaces the current production table after a merge and after the old tables are deleted.

- Snowflake Materialized Views: Snowflake native functionality that lets customers build pre-computed data sets that can improve query performance, but that come with a trade-off of additional processing time and cost.
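The merge approach boils down to matching incoming rows to existing keys and updating them in place. The sketch below illustrates that pattern using Python's built-in `sqlite3` module as a stand-in. It is not the Snowflake syntax used in the benchmark: SQLite has no `MERGE` statement, so the example uses `INSERT ... ON CONFLICT DO UPDATE` instead, and the table and column names are hypothetical.

```python
import sqlite3


def merge_batch(conn, rows):
    """Upsert (user_id, event_count) rows: insert new keys, add to existing ones."""
    # Requires SQLite 3.24+ for the ON CONFLICT ... DO UPDATE clause.
    conn.executemany(
        """
        INSERT INTO user_events (user_id, event_count)
        VALUES (?, ?)
        ON CONFLICT(user_id) DO UPDATE
        SET event_count = event_count + excluded.event_count
        """,
        rows,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE user_events (user_id TEXT PRIMARY KEY, event_count INTEGER NOT NULL)"
)

merge_batch(conn, [("a", 3), ("b", 1)])  # first micro-batch
merge_batch(conn, [("a", 2), ("c", 5)])  # second micro-batch updates "a", adds "c"

totals = dict(conn.execute("SELECT user_id, event_count FROM user_events"))
print(totals)
```

After the second batch, user `a` holds the combined count from both batches while `b` is untouched. The work per batch depends on matching against the existing table, which is part of why this pattern gets costly when it runs every minute on a large target.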

Key findings

Using Upsolver with Snowflake provided lower costs, better freshness and shorter development time.

- Upsolver was 79% less expensive

  - The cost of Upsolver was $12,600 per month vs. $61,000 per month for Snowflake.

- Upsolver provided per-minute freshness; Snowflake did not, despite the high cost

  - A Snowflake L cluster took over 7 minutes to process each minute of data.

  - Presumably, a 3XL DW would have provided per-minute freshness, albeit at 8 times the cost of the DW we tested.

- Upsolver took 1/7 the data engineering time

  - The Snowflake work included extracting the schema from the raw nested data, writing the shell script, and optimizing transformations.

  - As a declarative platform, Upsolver automated these activities; the developer uses SQL to specify source(s), target and desired output content.

Conclusion

Companies that want to feed their analysts and applications fresh data at big data scale need to augment their tried-and-true data platforms, such as data warehouses like Snowflake, with new technologies that were built to handle real-time data affordably. Upsolver provides a SQL-based data processing engine that, when coupled with Snowflake, helps companies avoid negative outcomes such as:

- Stale data for analytics provided to internal consumers or customers.

- Budget overruns from preparing large, continuous data sets in a data warehouse.

- Poor analytics agility due to data engineering bottlenecks.