
Comparing Upsolver and Databricks for Data Lake Processing

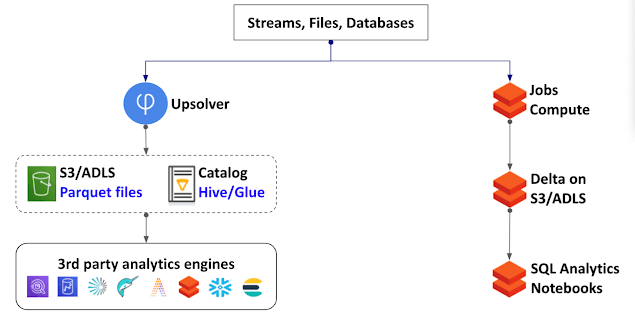

Upsolver and Databricks are two platforms to consider for building and running continuous workloads on your data lake. On this page we highlight the advantages of each and how they relate to various use cases.

Databricks Jobs Compute is a data lake processing service that competes directly with Upsolver. Databricks SQL Compute is a query service that can query Upsolver tables. Databricks Notebooks can also run against Upsolver tables.

TL;DR - Which to use when

| Criteria | Upsolver | Databricks Jobs Compute |
|---|---|---|
| Pipeline development interface | Prefer using a visual interface and/or writing SQL commands | Prefer writing Java/Scala/Python code for execution over Spark |
| Sink connectors | Want native output connectors for Amazon Athena / Snowflake / Redshift / Elasticsearch / etc. | Prefer to query data from the Databricks ecosystem using Delta Lake |
| Change data capture (CDC) | You don't have a tool for generating the CDC log | You already have a tool generating the CDC log |
| Data lake tables optimization | Automatic | Manual |
| Jobs orchestration | Automatic | Manual, usually via a 3rd-party tool (e.g. Airflow) |
| Schema evolution | Automatic | Manual |


Features comparison - connectors

| Feature | Upsolver | Databricks Jobs Compute |
|---|---|---|
| Kafka / Amazon Kinesis / Azure Event Hubs | Yes | Yes |
| Amazon S3 / Google Cloud Storage / Azure Data Lake Storage / HDFS | Yes | Yes |
| Databases (JDBC) | Yes | Yes |
| Databases (log-based CDC) | Yes, also includes multi-table replication with one job | No, requires a 3rd-party solution (Debezium, Attunity, DMS) and a job for each replicated table |
| Built-in sink connectors | Yes, including error recovery, throttling and upserts | No, must be implemented in code |
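To make the log-based CDC and upsert rows more concrete, here is a minimal, hypothetical sketch of what replaying a change log into a keyed table involves. It assumes Debezium-style events carrying an `op` code (`c` create, `r` snapshot read, `u` update, `d` delete) plus `before`/`after` row images; the in-memory dict stands in for the target table and is not how either product actually stores data. The function name `apply_cdc_events` is invented for illustration.

```python
from typing import Any, Dict, Iterable, List

Row = Dict[str, Any]


def apply_cdc_events(table: Dict[Any, Row], events: Iterable[Row], key: str = "id") -> Dict[Any, Row]:
    """Replay change events, in log order, onto a table keyed by primary key.

    Each event is assumed to follow the Debezium envelope: an 'op' code plus
    'before'/'after' row images. Creates, snapshot reads and updates all become
    upserts of the new image; deletes remove the row named by the 'before' image.
    """
    for event in events:
        op = event["op"]
        if op in ("c", "r", "u"):
            # Upsert: insert the row if the key is new, overwrite it otherwise.
            row = event["after"]
            table[row[key]] = row
        elif op == "d":
            # Delete events have a null 'after' image, so take the key from 'before'.
            table.pop(event["before"][key], None)
        else:
            raise ValueError(f"unsupported op code: {op!r}")
    return table


events: List[Row] = [
    {"op": "c", "before": None, "after": {"id": 1, "status": "new"}},
    {"op": "c", "before": None, "after": {"id": 2, "status": "new"}},
    {"op": "u", "before": {"id": 1, "status": "new"}, "after": {"id": 1, "status": "paid"}},
    {"op": "d", "before": {"id": 2, "status": "new"}, "after": None},
]
print(apply_cdc_events({}, events))  # {1: {'id': 1, 'status': 'paid'}}
```

Events must be applied in log order for each key; replaying the update before the create, for example, would leave a stale row behind.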

Features comparison - pipeline jobs development

| Feature | Upsolver | Databricks Jobs Compute |
|---|---|---|
| Interface | Visual drag-and-drop interface, synced with editable SQL including auto-complete | Java / Scala / Python |
| Strongly typed schema-on-read | Yes | No |
| Joins and streaming joins | Yes, executed as SQL commands | Yes, as code; the user has to manually define checkpoints and watermarks |
| Upserts over S3/ADLS | Yes, for any Parquet-based table | Yes, only for Delta tables, which are coupled to Databricks as a vendor |
| Jobs orchestration | Automatic, inferred from requested transformations | Manual, must build DAGs, often with 3rd-party tools like Airflow |
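The jobs orchestration row comes down to who writes the dependency graph. As a hypothetical illustration (not Upsolver's actual scheduler), if each job declares the tables it reads and writes, a valid run order can be derived automatically with a topological sort over that lineage. The job and table names below are invented; the sketch uses Python's standard `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set, Tuple

# Hypothetical job definitions: job name -> (tables read, tables written).
JOBS: Dict[str, Tuple[Set[str], Set[str]]] = {
    "ingest_orders": ({"kafka.orders"}, {"raw.orders"}),
    "ingest_users": ({"kafka.users"}, {"raw.users"}),
    "enrich_orders": ({"raw.orders", "raw.users"}, {"curated.orders"}),
    "daily_revenue": ({"curated.orders"}, {"mart.revenue"}),
}


def infer_run_order(jobs: Dict[str, Tuple[Set[str], Set[str]]]) -> List[str]:
    """Derive a dependency-respecting job order from table lineage alone."""
    # Map every output table to the job that produces it.
    producer = {table: job for job, (_, outputs) in jobs.items() for table in outputs}
    sorter = TopologicalSorter()
    for job, (inputs, _) in jobs.items():
        # A job depends on the jobs that produce the tables it reads;
        # external sources such as Kafka topics have no producing job.
        upstream = {producer[t] for t in inputs if t in producer}
        sorter.add(job, *upstream)
    # static_order() raises graphlib.CycleError if the lineage contains a cycle.
    return list(sorter.static_order())


print(infer_run_order(JOBS))
```

With a hand-built DAG, these same edges are declared explicitly and have to be kept in sync with the job code whenever a job's inputs change.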


Features comparison - data lake tables optimization

| Feature | Upsolver | Databricks Jobs Compute |
|---|---|---|
| Vacuum stale data | Automatic | Manual |
| Compaction of small files | Automatic and continuous (no locking) | Manual, locks table updates during the operation |
| Late events handling | Automatic | Manual |
| Schema evolution | New columns detected and added automatically (e.g. with SELECT * syntax) | Manual detection of upstream changes and manual implementation |
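Compaction of small files is essentially a bin-packing problem: many small files are rewritten into fewer files close to a target size. The following is a simplified, hypothetical planner using a greedy first-fit-decreasing heuristic with an assumed 128 MB target. It only decides which files to merge; it does not read or write Parquet, does not model how rewritten files are swapped in (where locking comes into play), and is not either vendor's algorithm.

```python
from typing import Dict, List

MB = 1024 * 1024
TARGET_BYTES = 128 * MB  # assumed target file size; real systems make this configurable


def plan_compaction(file_sizes: Dict[str, int], target: int = TARGET_BYTES) -> List[List[str]]:
    """Group small files into merge batches whose total size stays within `target`.

    First-fit decreasing: sort files largest first, then place each one into
    the first batch that still has room, opening a new batch if none does.
    Files already at or above the target are left alone.
    """
    small = sorted(
        ((name, size) for name, size in file_sizes.items() if size < target),
        key=lambda item: item[1],
        reverse=True,
    )
    batches: List[List[str]] = []
    remaining: List[int] = []  # free bytes left in each batch
    for name, size in small:
        for i, free in enumerate(remaining):
            if size <= free:
                batches[i].append(name)
                remaining[i] -= size
                break
        else:
            # No existing batch has room: start a new one.
            batches.append([name])
            remaining.append(target - size)
    # A batch with a single file would just rewrite it unchanged, so drop it.
    return [batch for batch in batches if len(batch) > 1]


sizes = {"a.parquet": 90 * MB, "b.parquet": 30 * MB, "c.parquet": 60 * MB,
         "d.parquet": 20 * MB, "e.parquet": 200 * MB}
print(plan_compaction(sizes))  # [['a.parquet', 'b.parquet'], ['c.parquet', 'd.parquet']]
```

Running such a planner continuously in the background, rather than as a scheduled manual job, is the distinction the table draws between the two columns.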

Features comparison - deployment

| Feature | Upsolver | Databricks Jobs Compute |
|---|---|---|
| SaaS control plane | Yes | Yes |
| Data plane in customer VPC | Yes | Yes |
| Elastic auto-scaling | Yes | Yes |


Features comparison - cost

| Feature | Upsolver | Databricks Jobs Compute |
|---|---|---|
| Compute consumption-based model | Yes | Yes |
| Use of AWS Spot instances for compute | Yes | Yes |
| RAM requirements | Low, uses in-memory compressed indexes for stateful processing | High, Spark decompresses the state in RAM, so Spark state is heavy on RAM usage |
| Additional key-value store | No, built-in indexing engine for high-cardinality stateful processing | Yes, an additional key-value store (Cassandra/Redis/DynamoDB) is often required to act as the index |
| Additional pipeline orchestration tool | No, orchestration is auto-inferred from transformations and also includes maintenance operations like compaction | Yes, Databricks only supports scheduled notebook execution |
| Additional tool for ingesting CDC logs | No, internally uses the open-source Debezium to replicate databases | Yes, can only replicate databases using JDBC |
