Explore our expert-made templates & start with the right one for you.

Table of contents

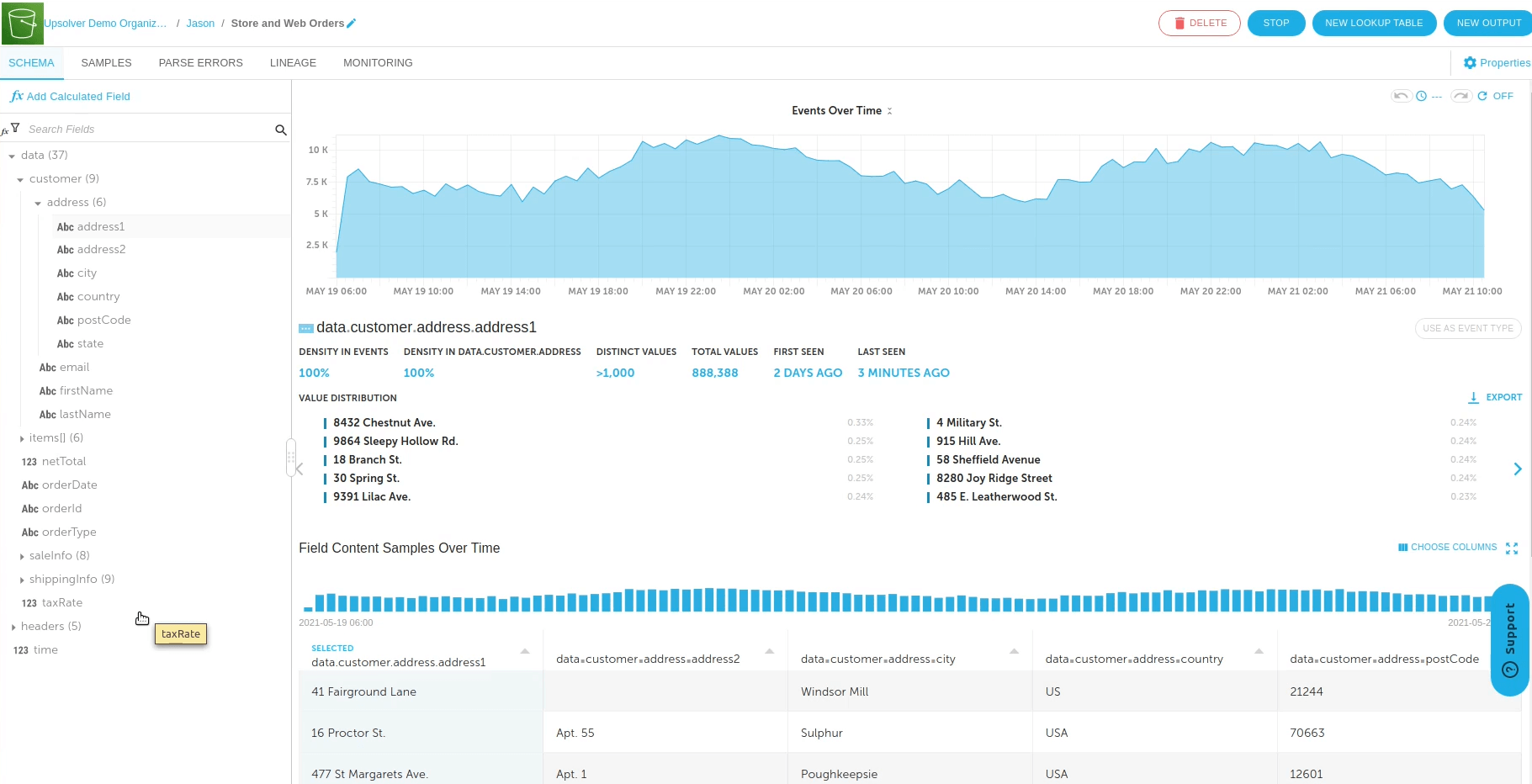

Upsolver automates the management and optimization of output tables so that data engineers only need to specify their transformations visually or in SQL and not worry about the “ugly data plumbing” needed to get to production.

The functions Upsolver automates include:

- Partition data

- Convert to column-based format

- Optimize file size (compaction)

- Upserts and deletes

- Vacuum stale and temporary files

- Orchestration

Partition data

Since object storage such as Amazon S3 doesn’t provide indexing, partitioning is the closest you can get to indexing in a cloud data lake. Partitioning data is typically done via manual ETL coding in Spark. When you’re trying to partition by event time, or employing any other partitioning technique that is not append-only, this process can get quite complex and time-consuming.

Upsolver will automatically partition the data on cloud object storage using best practices and Upsolver’s integration with the cloud metadata store. These partitions are continuously and automatically optimized to best answer the queries being run.

Convert to column-based format

Column-based formats are preferred for running fast and cost-effective analytics queries. Conversion of row-based cloud object storage to a column-based format is something Upsolver handles under the hood. Otherwise you would need to implement this manually in an ETL job.

Within Upsolver, you can select ORC or Parquet as the output format. Both of these formats offer the advantage of being open formats that are compressible and splittable. They also offer features that store data by employing different encoding, column-wise compression, compression based on data type, and predicate pushdown. Typically, enhanced compression ratios or skipping blocks of data involves reading fewer bytes from Amazon S3, resulting in enhanced query performance.

For each you configure:

- The stripe size or block size parameter—the stripe size in ORC or block size in Parquet equals the maximum number of rows that may fit into one block, in relation to size in bytes. The larger the stripe/block size, the more rows you can store in each block. The default ORC stripe size is 64MB, and the Parquet block size is 128 MB. We suggest a larger block size if your tables have several columns, to make sure that each column block is a size that permits effective sequential I/O.

- Data blocks parameter—if you have over 10GB of data, start with the default compression algorithm and test other compression algorithms.

- Number of blocks to be skipped—optimize by identifying and sorting your data by a commonly filtered column prior to writing your Parquet or ORC files. This ensures the variation between the upper and lower limits within the block is as small as possible within each block. This enhances its ability to be pruned.

Optimize file size (compaction) without locking files

Compaction is needed to ensure query performance on a raw data source comprising a huge number of very small files. Event-based streams from IoT devices, servers or applications will typically arrive in kb-scale JSON files, easily adding up to hundreds of thousands of new files being ingested into your data lake on a daily basis.

Writing small files to an object storage (Amazon S3, Azure Blob, HDFS, etc.) is easy enough; however, trying to query the data in this state using an SQL engine such as Athena or Presto will absolutely kill both your performance and your budget. Each file comes with its own overhead of milliseconds for opening the file, reading metadata and closing it. In addition, many files mean many non-contiguous disk seeks, which object storage is not optimized for.

If you’re using Upsolver, compaction is something you don’t need to worry about since it’s handled under the hood. It ensures that your file sizes are as big as possible while still fitting into memory. Plus, it regularly deletes uncompacted fields to save space and storage costs.

An important unique feature of Upsolver’s compaction process is that it allows access to the files while compaction is occurring so that your transformations continue to perform well.

Learn more about small files on S3.

Automating Upserts and Deletes for Strong Consistency

Updating or deleting data (upserts) is a basic function of a database, but is surprisingly difficult to do on top of a cloud object store. Upsolver enables automated efficient and quick update and delete operations on object storage.

In a database, upserts are barely an issue. Since databases have built-in indices, it is easy to pinpoint a specific record and to change that record or remove it completely. However, in a data lake, we are storing data in unstructured append-only object storage such as Amazon S3, Azure Blob Storage or HDFS. In this architecture, there’s no easy way to pinpoint a specific record, leading to expensive and close full scans. In the meantime, users don’t get a consistent, up-to-date view of the data.

The Upsolver platform lets you configure UPSERTs via a visual interface or as SQL command and then automatically and efficiently update and delete tables stored on object storage as part of ongoing streaming pipeline.

In Upsolver, you simply define an Update or Delete key for output tables. This tells Upsolver that the table is bound to get updates and deletes. As a result, Upsolver will run a two-phase process. In the first phase, new data is immediately written to object storage (append-only) so it will be immediately available for queries. What’s different is that users will query a view of the data that Upsolver created instead of the raw object store. The view’s only job is to return the last values that are associated with the table key. This ensures that the queried table is both immediately available and consistent with the latest state of the data.

To avoid performance issues with this approach as updates grow over time, Upsolver keeps a map between the table keys and the files that contain them. During the compaction process, files containing the same key are merged into a single file with one record per key (or zero if the last change was a delete). The process keeps changing the data storage layer so the number of scanned records on queries will be equal to the number of keys and not the total number of events.

Performing upserts as part of an ongoing data ingestion and storage optimization process has several advantages:

- Efficiency: Only the relevant data and partitions need to be read and rewritten, rather than the entire database

- Simple: Upserts are handled via a simple SQL query that does not require manual tuning

- Consistency: Since Upsolver indexes data and ensures exactly-once processing upon ingestion, there is a high level of certainty that all relevant records will be updated.

Vacuum stale and temporary files

Ingesting and transforming data on cloud object storage requires generating a lot of files to ensure performance. Under the hood, Upsolver continually “cleans up” the file system to ensure the detritus from these operational functions doesn’t create a performance or cost issue. As part of the process we ensure “safe deletes” by validating that a file is no longer needed for any pipeline before it is deleted.

Orchestrating it all

In traditional approaches to building data pipelines, you have to stitch together a number of DAGs that define each specific step in the pipeline, and decide what order in which to run these steps to optimize speed and cost.

Upsolver takes on this job for you. Using best practices obtained from decades of big data engineering expertise, we allow you to simply define your query visually or in SQL, and then we handle the rest, from the order of operations to the scheduling of the underlying file system optimization and maintenance operations in order to continually execute a healthy pipeline.