Explore our expert-made templates & start with the right one for you.

Use Redshift Streaming Ingestion, Redshift Serverless and Upsolver to Make Data Available for Analysis in Seconds

Amazon Redshift is the most widely-used cloud data warehouse. Redshift makes it easy and cost effective to perform analytics on vast amounts of data. Redshift Streaming Ingestion for Amazon Kinesis Data Streams (KDS) enables customers to load data into Redshift with low latency and without the need to stage the data in Amazon S3. This new capability enables customers to build reports and dashboards and perform analytics using fresh and current data, without the need to manage custom code that periodically loads new data.

Upsolver is an AWS Advanced Technology Partner that enables customers to ingest data from a wide range of sources, transform it, and load the results into their target of choice, such as Kinesis Data Streams and Redshift. Data analysts, engineers, and scientists define their transformation logic using SQL, and Upsolver automates the deployment, scheduling, and maintenance of the data pipeline. Pipeline Ops simplified!

This post is broken up into two parts:

- How to configure Upsolver to stream events to Kinesis Data Streams that are consumed by Redshift using Streaming Ingestion

- How to write event data to your data lake and consume it using Amazon Redshift Serverless so you can go from raw events to analytics ready datasets in minutes.

If you have any questions about using Streaming Ingestion with Upsolver, and/or anything related to building modern data pipelines, start the conversation in our Upsolver Community Slack channel.

Before you get started, you must have Upsolver installed. If you haven’t yet installed Upsolver, find it in the AWS Marketplace where you can deploy it directly into your VPC to securely access Kinesis Data Streams and Amazon Redshift.

Configuring Upsolver to stream events to Kinesis Data Streams that are consumed by Redshift using Streaming Ingestion

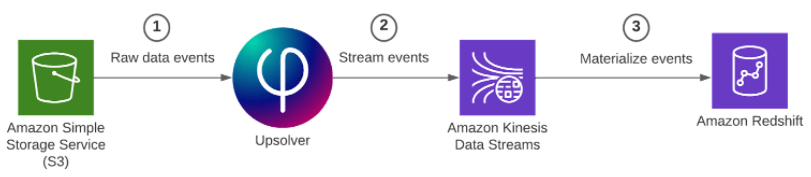

The following diagram represents the architecture to write events to KDS and Redshift:

To implement this solution, complete the following high-level steps:

- Configure the source Kinesis data stream.

- Execute the data pipeline.

- Create an Amazon Redshift external schema and materialized view.

Configure the source Kinesis data stream

For the purpose of this post you’ll create an Amazon S3 data source that contains sample retail data in JSON format. Upsolver ingests this data as a stream; as new objects arrive they are automatically ingested and streamed to the destination.

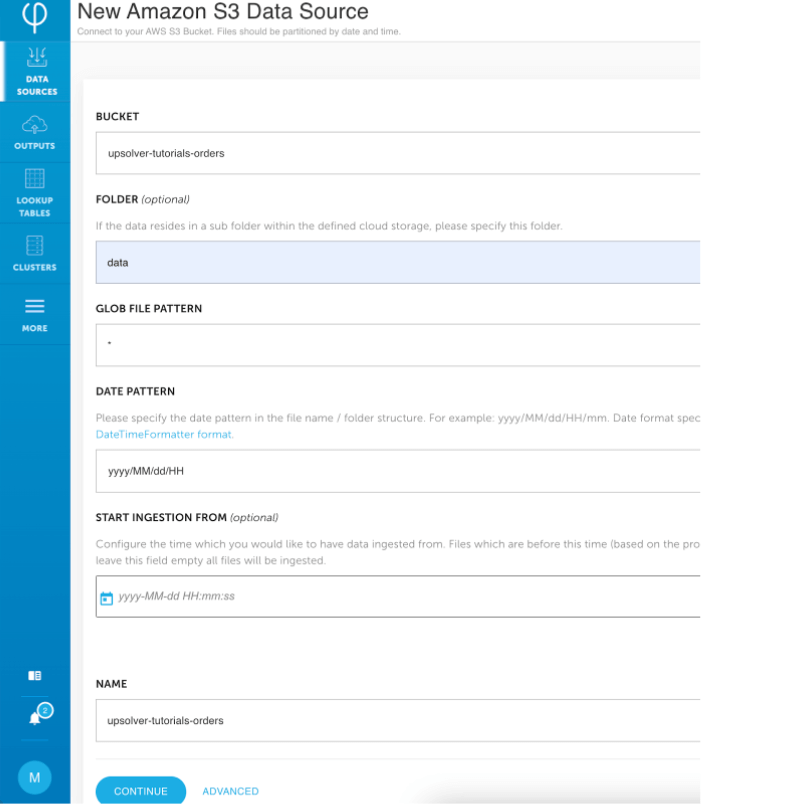

1. On the Upsolver console, select Data Sources, and in the top-right corner of the screen click New. Then select Amazon S3 as your data source.

- For Bucket, you can use the bucket with the public dataset (as shown in the below screenshot) or enter a bucket name with your own data.

2. To create the data source, click Continue.

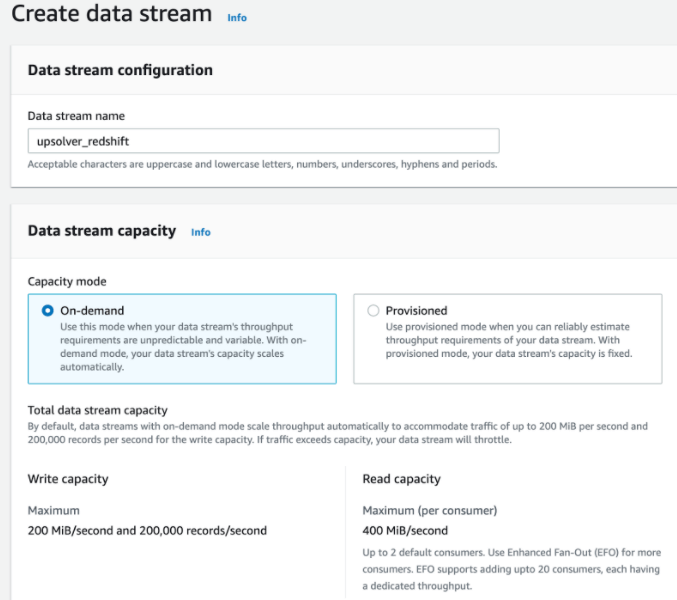

3. Create a data stream in Kinesis Data Streams. This is the output stream Upsolver uses to write events that will be consumed by Amazon Redshift.

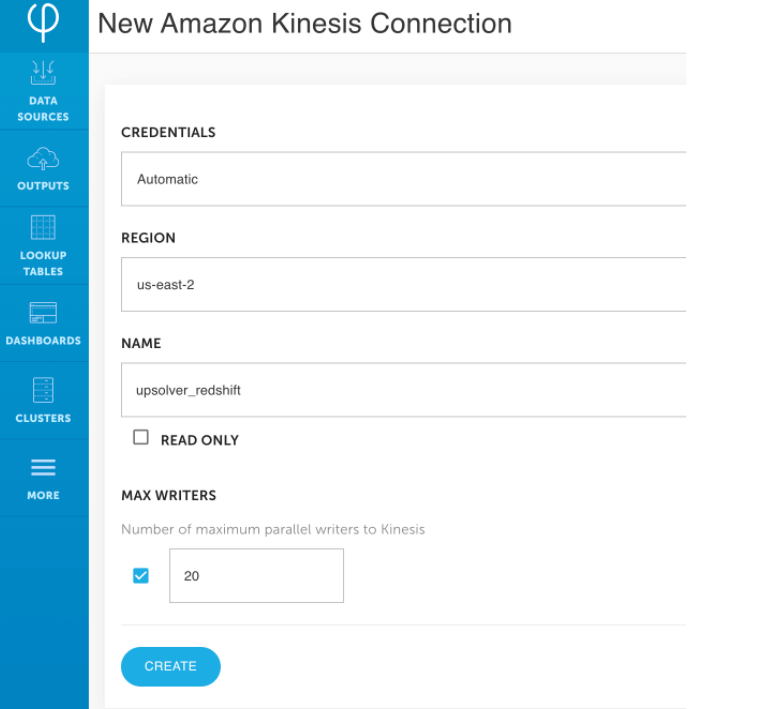

Now, still in Upsolver, create a Kinesis connection. Creating this connection enables you to define the authentication method Upsolver uses – for example, an AWS Identity and Access Management (IAM) access key and secret key or an IAM role. To create this connection:

- Click More and then click Connections.

- On the top righthand side of the screen click New Connection. Then scroll down and click Amazon Kinesis. For Region, enter your AWS Region. For Name, enter a name for your connection. (For this post, we’ve named it

upsolver_redshift, as shown below. ) - Click Create.

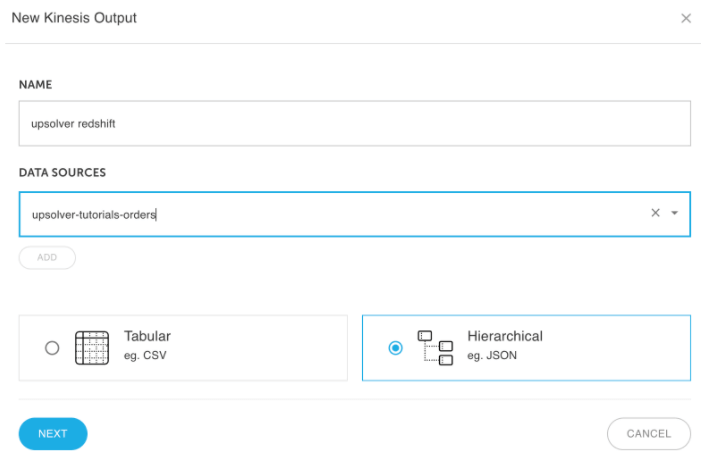

Before you can consume the events in Redshift, you must write the events to the output Kinesis data stream. To do this:

- From the Upsolver console navigate to Outputs and then click Kinesis.

- In the Data Sources box, choose the Kinesis data source you created in the previous step.

- Depending on the structure of your event data, you have two choices:

- If the event data you’re writing to the output doesn’t contain any nested fields, click Tabular. Upsolver automatically flattens nested data for you.

- To write your data in a nested format, click Hierarchical.

Because we’re working with Kinesis Data Streams, we’ll click Hierarchical.

Execute the data pipeline

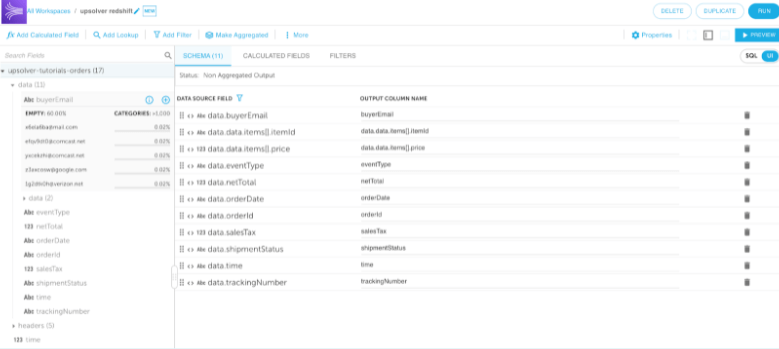

Now that the stream is connected from the source to an output, you must select which fields of the source event you wish to pass through. You can also opt to apply transformations to your data – for example, adding correct timestamps, masking sensitive values, and adding computed fields; read more about data transformations in the Upsolver documentation.

After adding the columns you wish to include in the output and applying transformations, in the top right corner of the screen click Run. This starts the data pipeline. As new events arrive in the source stream, Upsolver automatically transforms them and forwards the results to the output stream. There is no need to schedule or orchestrate the pipeline; it’s always on.

Create an Amazon Redshift external schema and materialized view

First, create an IAM role with the appropriate permissions as described in the Amazon Redshift documentation on streaming ingestion. Then use the Redshift query editor, AWS Command LIne Interface (AWS CLI), or API to run the following SQL statements.

1. Create an external schema that is backed by Kinesis Data Streams. This command requires you to include the IAM role you created by following the documentation.

CREATE EXTERNAL SCHEMA upsolver

FROM KINESIS

IAM_ROLE 'arn:aws:iam::123456789012:role/redshiftadmin';2. Create a materialized view that allows you to execute a SELECT statement against the event data that Upsolver produces.

CREATE MATERIALIZED VIEW mv_orders AS

SELECT ApproximateArrivalTimestamp, SequenceNumber,

json_extract_path_text(from_varbyte(Data, 'utf-8'), 'orderId') as order_id,

json_extract_path_text(from_varbyte(Data, 'utf-8'), 'shipmentStatus') as shipping_status

FROM upsolver.upsolver_redshift;3. Instruct Amazon Redshift to materialize the results to a table called mv_orders.

REFRESH MATERIALIZED VIEW mv_orders;4. Run your queries against your streaming data, such as the following:

SELECT * FROM mv_orders;Using Upsolver to write event data to the data lake and query it with Amazon Redshift Serverless

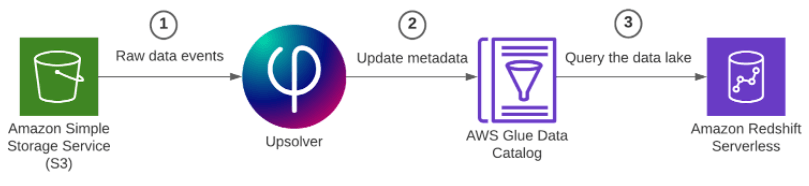

The following diagram represents the architecture to write events to the data lake and query the data with Redshift:

To implement this solution, you complete the following high-level steps:

- Configure the source Kinesis data stream.

- Connect to the AWS Glue Data Catalog and update the metadata.

- Query the data lake.

Configure the source Kinesis data stream

We already completed this step earlier in the post, so you don’t need to do anything different.

Connect to the AWS Glue Data Catalog and update the metadata

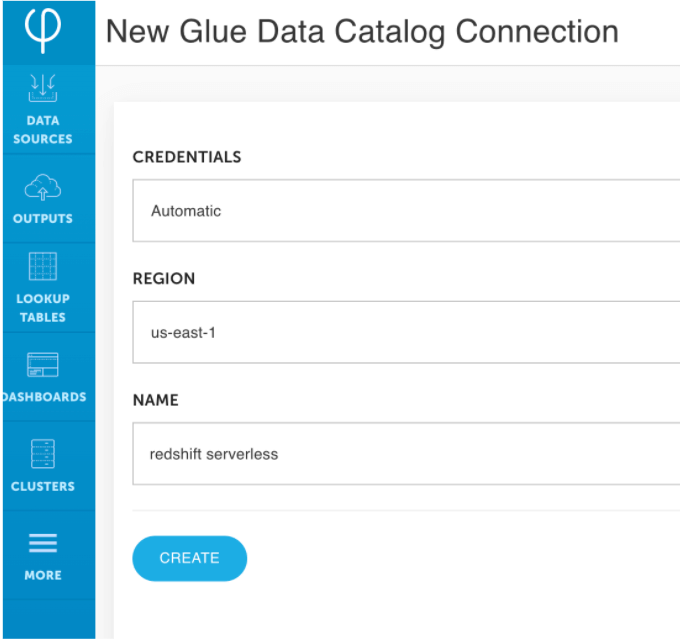

To update the metadata, complete the following steps:

1. In the Upsolver console, click More and then click Connections. Scroll down and select the AWS Glue Data Catalog connection. For Region, enter your region. For Name, enter a descriptive name. For this post, we call it redshift_serverless. Then click Create.

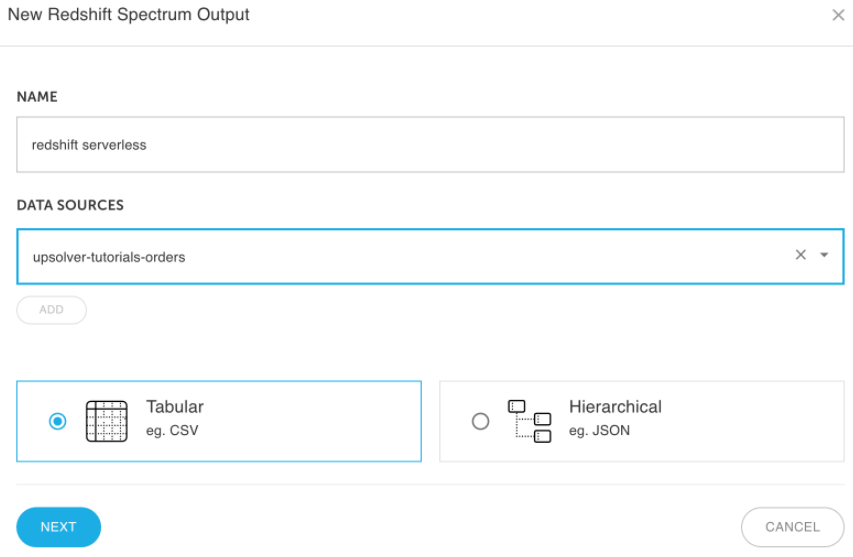

2. Create a Redshift Spectrum output following the same steps from earlier in this post. Click Tabular, as we’re writing output in table-formatted data to Amazon Redshift.

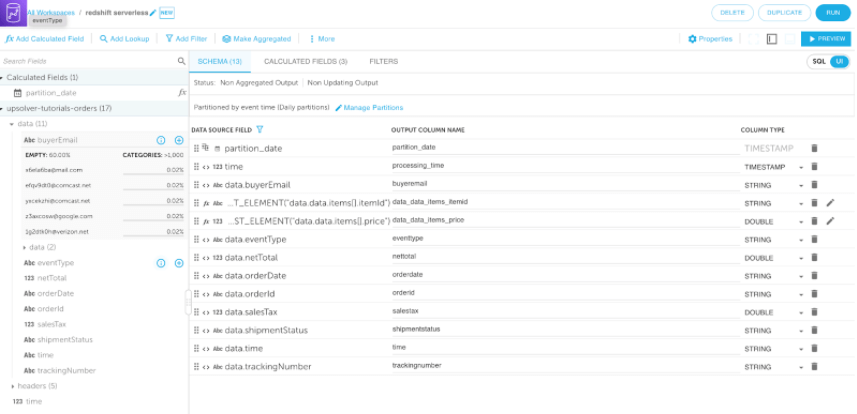

3. Map data source fields to the Redshift Spectrum output. Then, in the upper-right corner of the screen, click Run.

4. Switch back to your Amazon Redshift console and create an Amazon Redshift Serverless endpoint. To do this, follow the Amazon Redshift Serverless documentation. Ensure you associate your Upsolver role to Amazon Redshift Serverless.

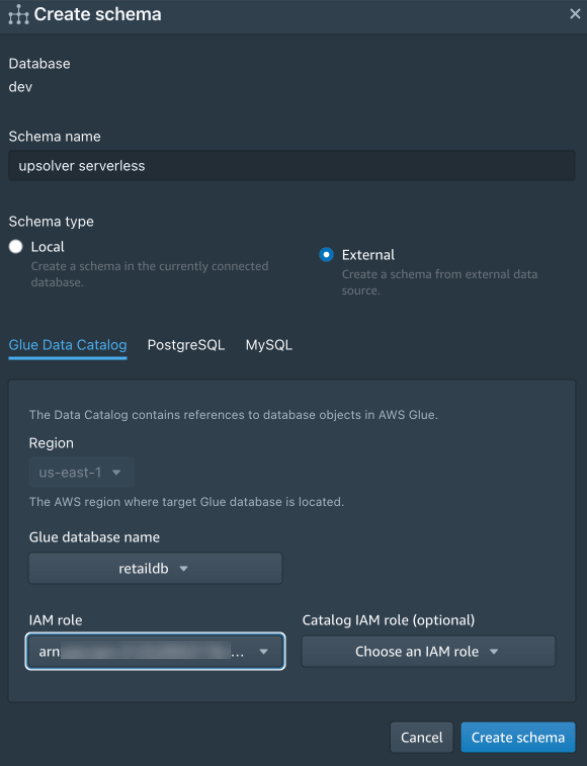

5. When the endpoint launches, open the new Amazon Redshift query editor to create an external schema that points to the AWS Glue Data Catalog (see the screenshot below). This enables you to execute queries against data stored in your data lake.

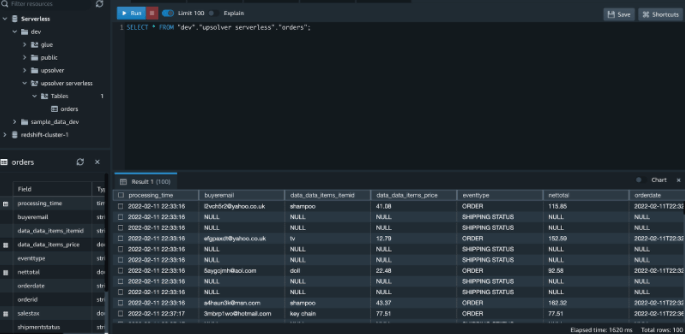

Query the data lake

Now that your Upsolver data is being automatically written and maintained in your data lake, you can query it using the tool of your choice and the Amazon Redshift query editor, as shown in the following screenshot:

Summary – Upsolver and Amazon Redshift

In this post you learned how to use Upsolver to stream event data into Amazon Redshift using streaming ingestion for Kinesis Data Streams. You also learned how you can use Upsolver to write the stream to your data lake and query it using Amazon Redshift Serverless.

Upsolver makes it easy to build data pipelines using SQL and automate the complexity of pipeline management, scaling, and maintenance. Upsolver and Amazon Redshift enable you to quickly and easily analyze data in real time.

This post was written by Mei Long, Roy Hasson, and Maneesh Sharma. Originally published by AWS. Republished with permission.

More Information About the Upsolver Platform

There’s much more information about the Upsolver platform, including how it automates a full range of data best practices, real-world stories of successful implementations, and more, at www.upsolver.com.

- To speak with an expert, please schedule a demo: https://www.upsolver.com/schedule-demo.

- Try SQLake for free (early access). SQLake is Upsolver’s newest offering. It lets you build and run reliable data pipelines on streaming and batch data via an all-SQL experience. Try it for free. No credit card required.

If you have any questions, or wish to discuss this integration or explore other use cases, start the conversation in our Upsolver Community Slack channel.