
Apache Iceberg vs Parquet – File Formats vs Table Formats

TL;DR: While the two concepts are related, comparing Parquet to Iceberg is asking the wrong question. Parquet is a columnar file format for efficiently storing and querying data (the same category as CSV or Avro). Iceberg is a table format: an abstraction layer that enables more efficient data management and ubiquitous access to the underlying data (the same category as Hudi or Delta Lake).

Rather than comparing Parquet and Iceberg, the more useful discussion is lakehouse versus data lake architectures. We created an e-Learning module that explores this topic in detail.

File Formats vs Table Formats

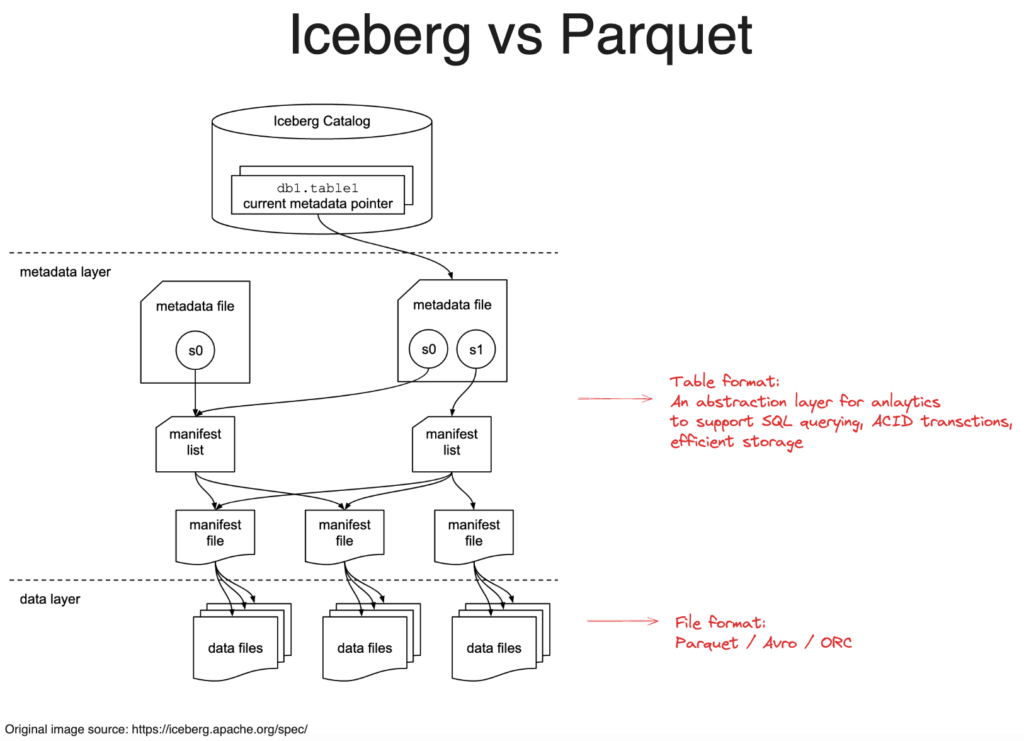

The key difference between a file format like Parquet and a table format like Iceberg is their purpose. File formats focus on efficient storage and compression of data. They define how the raw bytes representing records and columns are organized and encoded, whether on local disk, in a distributed file system such as HDFS, or in an object store such as Amazon S3.
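
To make "how records and columns are organized" concrete, here is a toy Python sketch contrasting a row-oriented layout (CSV-like) with a column-oriented one (the idea behind Parquet). It is only an illustration. Parquet's real encoding, with row groups, column chunks, pages, and dictionary and run-length encodings, is far more involved. The helper names and sample records are made up for the example.

```python
from itertools import groupby

# Made-up sample records for illustration.
records = [
    {"id": 1, "country": "US", "amount": 10.0},
    {"id": 2, "country": "US", "amount": 12.5},
    {"id": 3, "country": "US", "amount": 7.25},
    {"id": 4, "country": "DE", "amount": 3.0},
]


def to_row_layout(rows):
    """Row layout (CSV-like): each record's values are stored next to each other."""
    return [value for row in rows for value in row.values()]


def to_column_layout(rows):
    """Column layout (the idea behind Parquet): each column's values are stored together."""
    return {name: [row[name] for row in rows] for name in rows[0]}


def run_length_encode(values):
    """Collapse runs of repeated values into (value, run_length) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


row_layout = to_row_layout(records)
column_layout = to_column_layout(records)

# Row layout: summing "amount" means striding over every field of every record.
total_from_rows = sum(row_layout[2::3])

# Column layout: the same query reads one contiguous list and skips the other columns.
total_from_columns = sum(column_layout["amount"])

# Once a low-cardinality column's values sit side by side, they compress well.
encoded_country = run_length_encode(column_layout["country"])

print(total_from_rows, total_from_columns, encoded_country)
```

Both layouts hold the same data. The columnar one simply makes single-column scans and compression cheaper, which is the kind of trade-off a file format decides.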

In contrast, table formats provide a logical abstraction on top of the stored data that makes it easier to organize, query and update. They enable SQL engines to see a collection of files as a table with rows and columns that can be queried and updated transactionally. For example, Iceberg tracks metadata about data files that allows engines to understand the table’s schema, partitions, snapshots and more. So while Parquet handles low-level representation and storage, Iceberg adds table semantics and capabilities like ACID transactions, time travel and schema evolution.
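
To make the "metadata about data files" idea concrete, here is a heavily simplified Python sketch. The `DataFile` and `TableMetadata` classes, their fields, the `plan_scan` helper and the S3 paths are all hypothetical. Real Iceberg metadata is split across metadata files, manifest lists and manifests. The sketch does mirror one real behavior: Iceberg manifests record per-file partition values and column bounds, so an engine can skip files without opening them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataFile:
    """Hypothetical per-file metadata entry (not Iceberg's actual schema)."""
    path: str          # location of the data file in object storage (made up)
    file_format: str   # one table can mix file formats, e.g. "PARQUET" and "ORC"
    event_date: str    # partition value for the file (ISO date string)
    record_count: int
    min_amount: float  # per-file column bounds, used for pruning
    max_amount: float


@dataclass(frozen=True)
class TableMetadata:
    """Hypothetical table metadata: a schema plus the files in the current snapshot."""
    schema: dict       # column name -> type name
    files: tuple       # DataFile entries


def plan_scan(meta, event_date, min_amount):
    """Return only the files that could hold rows where
    event_date == <event_date> AND amount >= <min_amount>."""
    return [
        f.path
        for f in meta.files
        if f.event_date == event_date    # partition pruning
        and f.max_amount >= min_amount   # skip files whose max is below the filter
    ]


meta = TableMetadata(
    schema={"id": "long", "event_date": "date", "amount": "double"},
    files=(
        DataFile("s3://bucket/t/a.parquet", "PARQUET", "2024-01-01", 1000, 0.0, 50.0),
        DataFile("s3://bucket/t/b.parquet", "PARQUET", "2024-01-02", 1000, 5.0, 900.0),
        DataFile("s3://bucket/t/c.orc", "ORC", "2024-01-02", 500, 0.0, 20.0),
    ),
)

print(plan_scan(meta, "2024-01-02", 100.0))
```

The engine never lists the bucket or opens `a.parquet` or `c.orc`. The table's metadata already tells it that only `b.parquet` can match.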

The following diagram is taken from the Iceberg table spec, with our notes in red:

The Relationship Between Parquet and Iceberg

Iceberg is an open table format that is not tied to any specific file format. It is designed to work with multiple formats like Parquet, Avro and ORC. An Iceberg table presents a collection of data files in object storage or HDFS as a single logical table.

Under the hood, the actual table data is still stored as files in the underlying storage, usually in a format like Parquet. Iceberg layers table management capabilities on top of those file formats rather than replacing them. For example, when data is added to or updated in an Iceberg table, new data files (such as Parquet files) are written to represent the changes, and existing data files are not modified in place. A SQL engine, however, interacts with the logical Iceberg table and doesn’t need to reason about the underlying files directly.
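
The write path described above can be sketched with a toy copy-on-write model in Python. Copy-on-write is one of the strategies Iceberg supports for row-level changes; the other is merge-on-read with delete files. In this sketch, `storage`, `snapshots`, `write_file`, `commit`, `read` and `update` are hypothetical stand-ins for object storage and table metadata, not Iceberg's API. The sketch shows two properties: an update writes a new file instead of editing an old one, and the reader only ever asks the table (a snapshot) for rows.

```python
# Hypothetical toy model, not Iceberg's API.
storage = {}    # stands in for object storage: path -> tuple of rows, written once
snapshots = []  # snapshots[i] is the tuple of file paths that make up the table at version i


def write_file(path, rows):
    """Write a new data file; existing paths are never overwritten."""
    if path in storage:
        raise ValueError(f"{path} already exists; data files are immutable")
    storage[path] = tuple(rows)
    return path


def commit(paths):
    """Publish a new snapshot and return its id."""
    snapshots.append(tuple(paths))
    return len(snapshots) - 1


def read(snapshot_id=-1):
    """Engine-side view: the logical table's rows at a snapshot (latest by default)."""
    return [row for path in snapshots[snapshot_id] for row in storage[path]]


def update(predicate, change, new_path):
    """Copy-on-write: rewrite only the files that contain matching rows, then commit."""
    kept, rewritten_rows = [], []
    for path in snapshots[-1]:
        rows = storage[path]
        if any(predicate(row) for row in rows):
            rewritten_rows.extend(change(row) if predicate(row) else row for row in rows)
        else:
            kept.append(path)
    if not rewritten_rows:
        return len(snapshots) - 1  # nothing matched: keep the current snapshot
    return commit(kept + [write_file(new_path, rewritten_rows)])


s0 = commit([
    write_file("data/f1.parquet", [{"id": 1, "status": "new"}, {"id": 2, "status": "new"}]),
    write_file("data/f2.parquet", [{"id": 3, "status": "new"}]),
])
s1 = update(lambda r: r["id"] == 2, lambda r: {**r, "status": "shipped"}, "data/f3.parquet")

print(read(s1))  # current table: id 2 is now "shipped"
print(read(s0))  # time travel: snapshot s0 still sees the original rows
print(snapshots[s1])
```

Because `data/f1.parquet` is never overwritten, reading snapshot `s0` still returns the original rows. This is the same mechanism that makes time travel cheap.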

Iceberg Enables Ubiquitous Access to Cloud Data

One major motivation behind the development of Iceberg was enabling ubiquitous access to data stored in cloud object stores such as Amazon S3 or Azure Data Lake Storage. Rather than tying data to a specific query engine like Spark or Hive, Iceberg provides a way to manage tables centrally and expose them to multiple processing engines.

For example, a user may choose Presto for ad-hoc SQL queries over Iceberg tables while periodically running Spark ETL batch jobs that read from and write to those same tables. Iceberg’s snapshots and atomic commits make this concurrent access safe: readers query a consistent snapshot while writers commit new ones, and the same snapshots enable time travel. The goal is to avoid silos and “copies of copies” by letting many engines share the same storage layer.
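
A rough sketch of why concurrent writers don't clobber each other follows. An Iceberg commit writes a new metadata file and asks the catalog to atomically swap the table's current-metadata pointer. If another writer committed first, the swap fails and the writer retries against the new state. The `Catalog` class, `compare_and_swap`, `append_files` and the `vN.metadata.json` naming below are hypothetical simplifications of that optimistic-concurrency model, not a real catalog API.

```python
import threading


class Catalog:
    """Hypothetical catalog: holds a pointer to the table's current metadata version."""

    def __init__(self, files):
        self._lock = threading.Lock()
        self.metadata = {"v1.metadata.json": tuple(files)}  # location -> files in that version
        self.current_location = "v1.metadata.json"

    def compare_and_swap(self, expected_location, new_files):
        """Atomically publish a new version only if nobody committed since
        `expected_location` was read. Returns the new location, or None on conflict."""
        with self._lock:
            if self.current_location != expected_location:
                return None
            new_location = f"v{len(self.metadata) + 1}.metadata.json"
            self.metadata[new_location] = tuple(new_files)
            self.current_location = new_location
            return new_location

    def files(self, location=None):
        """Files visible at a given metadata version (current version by default)."""
        return self.metadata[location or self.current_location]


def append_files(catalog, new_files, max_retries=5):
    """Optimistic writer: read the base, build the new file list, try to commit, retry on conflict."""
    for _ in range(max_retries):
        base = catalog.current_location
        location = catalog.compare_and_swap(base, catalog.files(base) + tuple(new_files))
        if location is not None:
            return location
    raise RuntimeError("commit kept conflicting; giving up")


catalog = Catalog(["f1.parquet"])

# Two writers (say, a Spark batch job and an ingestion job) read the same base version.
base_a = base_b = catalog.current_location

# Writer B commits first and wins.
loc_b = catalog.compare_and_swap(base_b, catalog.files(base_b) + ("b1.parquet",))

# Writer A's attempt against the stale base is rejected instead of silently
# overwriting B's change...
loc_a_stale = catalog.compare_and_swap(base_a, catalog.files(base_a) + ("a1.parquet",))

# ...so A re-reads the current version and retries, keeping both writers' files.
loc_a = append_files(catalog, ["a1.parquet"])

print(loc_b, loc_a_stale, loc_a)
print(catalog.files())
```

Old metadata versions stay readable. A Presto query that started on `v1.metadata.json` therefore keeps seeing a consistent table while Spark commits `v2` and `v3`.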

Need to Ingest Data Into Apache Iceberg Tables? Try Upsolver

- Upsolver is purpose-built for high-scale data ingestion into data lakes and warehouses. It can reliably land billions of records per day in S3 and create query-ready Iceberg tables.

- Configuration is code-free: simply point Upsolver at your data sources, such as databases or event streams, and it automatically lands that data in Iceberg tables ready for analysis.

- This solves the common challenge of building and maintaining reliable, performant pipelines to land production data into cloud storage.

- Upsolver handles schema management, partitioning, compaction and layout optimization automatically behind the scenes, so Iceberg tables can always be queried performantly in any engine that supports the AWS Glue Data Catalog.
